💡 From Math to Ministries, AI’s Scope Keeps Expanding

AI use surges outside wealthy countries, Albania gives procurement to an AI minister, and an AI agent formalises a major theorem in three weeks.

🎵 Podcast

Don’t feel like reading? Listen to it instead.

By the way, if you like the podcast, please let me know (20 seconds).

📰 Latest News

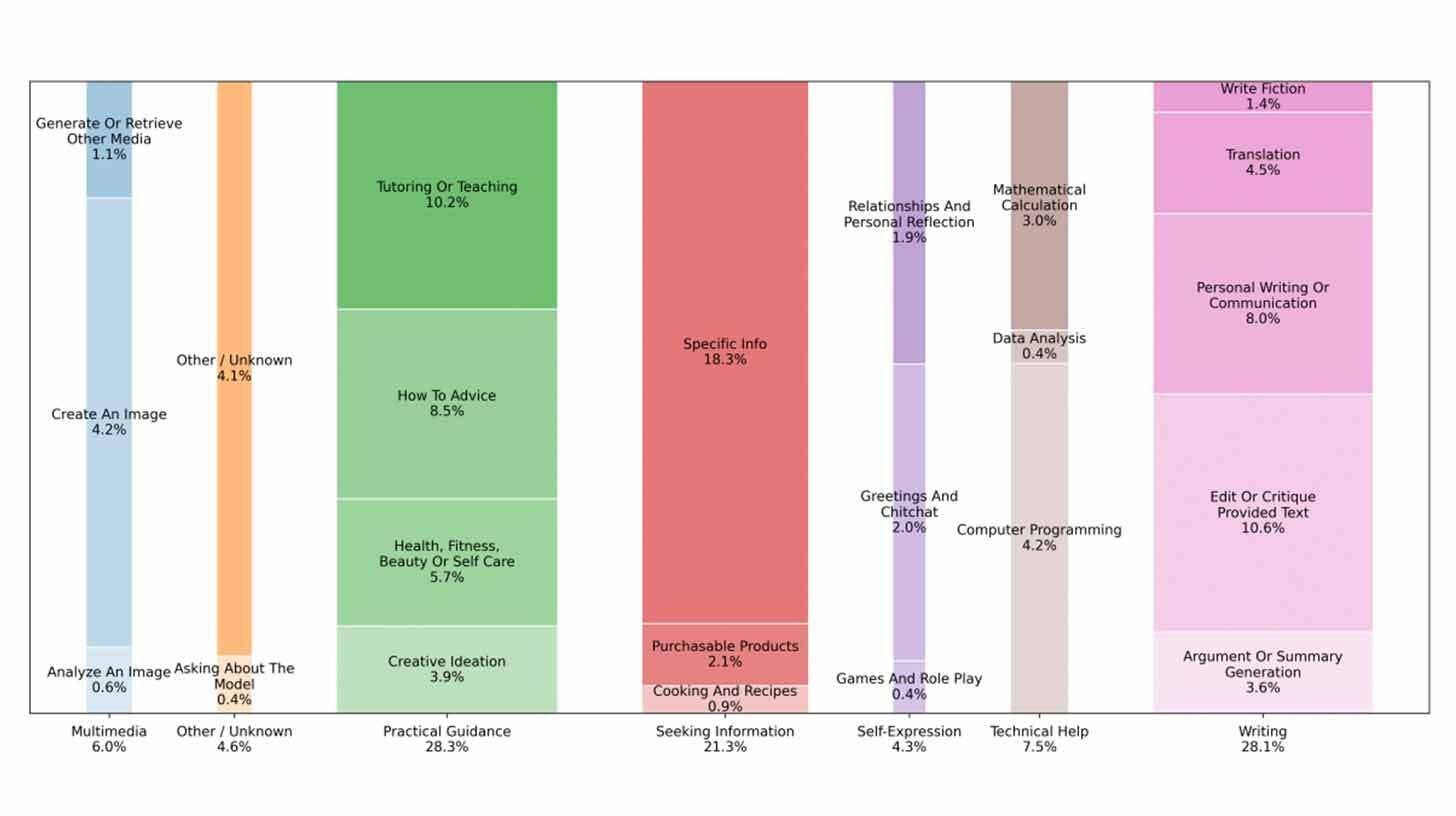

AI Goes Personal: 70% of ChatGPT Chats Are Now Non-Work

Personal use of AI has taken over. Non-work messages on ChatGPT rose from 53% in mid-2024 to more than 70% by mid-2025, and adoption in low- and middle-income countries has grown over four times faster than in the richest economies. Anthropic’s latest data shows Claude usage is heavily coding-centred and that users are delegating more tasks, especially with its Claude Code agent.

Why it matters:

Product teams should optimise for advice and search, not only output generation. Developer workflows are shifting toward automation, so tools that handle end-to-end coding steps will gain ground while review and validation remain critical. Regional gaps matter for go-to-market plans, since growth is fastest where incomes are lower but usage breadth is narrower. Expect policy debates on digital inclusion to intensify as adoption spreads beyond wealthy markets.

Albania Appoints AI Minister to Run Procurement

Albania has appointed Diella, an AI-powered virtual assistant, as a cabinet-level “virtual minister” to oversee public procurement. Prime Minister Edi Rama says Diella will gradually take over awarding tenders from ministries to cut corruption and make the process more transparent. The rollout is described as step by step, with Diella assessing bids on merit and aiming for fully transparent tender decisions.

Why it matters:

If implemented at scale, this shifts discretion from individual officials to a rules-driven system, which could reduce favouritism and bribery. It could also standardise procurement, speeding up awards and lowering opportunities for interference. The approach raises basic governance questions, for example who is accountable for errors and how bidders can appeal an automated decision. Other governments will watch closely to see if outcomes improve without new risks to fairness and oversight.

The Web Just Got a Licensing Layer for AI

Really Simple Licensing (RSL) is a new open web standard that lets sites publish machine-readable terms for how AI can use their content and how they should be paid. Early supporters include Reddit, Yahoo, Medium, Quora, Ziff Davis and others. RSL adds a licensing layer alongside robots.txt and supports models like attribution, pay-per-crawl and pay-per-inference. A nonprofit “RSL Collective” will manage optional collective licensing. It’s available for any publisher to adopt now.

Why it matters:

AI companies get a clear way to license the open web at scale, instead of one-off deals or scraping disputes. Publishers of all sizes can turn AI usage into revenue with simple metadata rather than complex negotiations, which could bring more high-quality content into training and inference while reducing legal fights. If adoption spreads, RSL could become a common payment and permission layer between the web and AI, shaping how models access, attribute, and compensate online content.

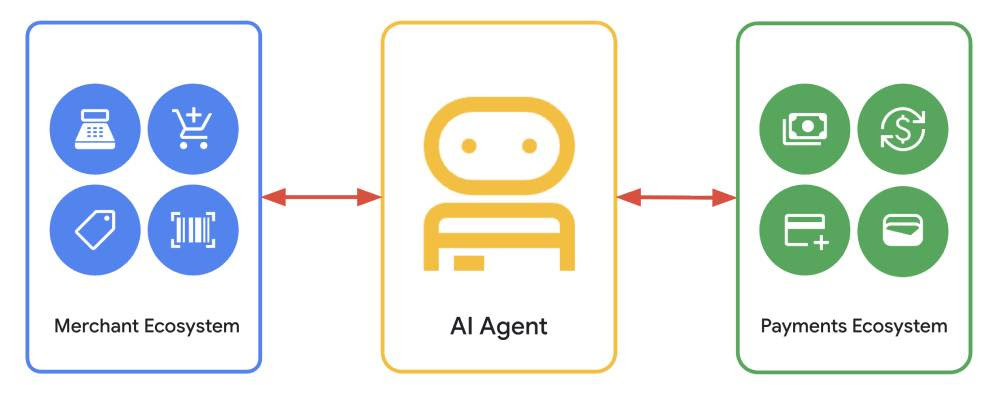

Google’s AP2 Lets AI Agents Shop for You

AI agents can now check out for you, using Google’s new Agent Payments Protocol. AP2 defines signed “mandates” that record a user’s intent, then a signed cart to authorise the purchase, creating an auditable trail. It supports cards, bank transfers, and stablecoins, and ships with open specs plus an A2A x402 crypto extension built with Coinbase. American Express, Mastercard, PayPal, Salesforce, Intuit and dozens more are on board.
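To make the mandate-then-cart idea concrete, here is a minimal sketch of the pattern, not the AP2 specification itself. It assumes a single shared demo key and uses HMAC-SHA256 from Python's standard library as a stand-in for whatever signature scheme the real protocol uses. The field names and the helper functions (`make_mandate`, `make_cart`, `purchase_is_authorised`) are hypothetical and chosen only for illustration.

```python
import hashlib
import hmac
import json

# Illustrative only: a shared demo key stands in for real signing credentials.
USER_KEY = b"demo-user-key"


def sign(payload: dict, key: bytes) -> str:
    """Return a hex HMAC-SHA256 over a canonical JSON encoding of the payload."""
    message = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def signed(payload: dict, key: bytes) -> dict:
    """Wrap a payload together with its signature."""
    return {"payload": payload, "signature": sign(payload, key)}


def is_valid(record: dict, key: bytes) -> bool:
    """Check that a record's signature matches its payload."""
    return hmac.compare_digest(record["signature"], sign(record["payload"], key))


def make_mandate(user: str, intent: str, max_total_cents: int, key: bytes) -> dict:
    """Record what the user asked for and the most the agent may spend."""
    payload = {
        "type": "mandate",
        "user": user,
        "intent": intent,
        "max_total_cents": max_total_cents,
    }
    return signed(payload, key)


def make_cart(mandate: dict, items: list, key: bytes) -> dict:
    """Build a signed cart that points back at the mandate it fulfils."""
    payload = {
        "type": "cart",
        "mandate_signature": mandate["signature"],
        "items": [{"name": name, "price_cents": price} for name, price in items],
        "total_cents": sum(price for _, price in items),
    }
    return signed(payload, key)


def purchase_is_authorised(mandate: dict, cart: dict, key: bytes) -> bool:
    """Both records must verify, be linked, and respect the spending limit."""
    return (
        is_valid(mandate, key)
        and is_valid(cart, key)
        and cart["payload"]["mandate_signature"] == mandate["signature"]
        and cart["payload"]["total_cents"] <= mandate["payload"]["max_total_cents"]
    )


mandate = make_mandate("alice", "buy running shoes", 12_000, USER_KEY)
cart = make_cart(mandate, [("trail shoes", 9_500)], USER_KEY)
print(purchase_is_authorised(mandate, cart, USER_KEY))
```

Because the cart embeds the mandate's signature, the two records form a chain: anyone holding the key can later confirm that the purchase matched what the user asked for, and any edit to either record breaks verification. A production system would presumably use public-key signatures so that merchants can verify without being able to forge.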

Why it matters:

This tackles the accountability gap when a non-human spends your money. Merchants get a standard way to verify an agent is acting on real user instructions, and to resolve disputes with a clear record. Product teams can build agentic shopping without bespoke risk plumbing for every partner. Regulators and auditors get a model that maps to existing controls, which should speed approvals and reduce integration friction.

From Years to Weeks: AI Formalises Strong Prime Number Theorem

What human teams spent 18 months attempting, an AI agent finished in three weeks. Gauss, Math Inc’s autoformalisation agent, produced a computer-checked Lean proof of the strong prime number theorem, a challenge set by Terence Tao and Alex Kontorovich. The effort generated about 25,000 lines of Lean and over 1,000 theorems, with experts providing the plan and reviewing key steps.
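For readers who want the statement itself: "strong prime number theorem" usually refers to the prime number theorem with the classical de la Vallée Poussin error term. The item above does not spell out the exact formulation that was formalised, so treat the following as the standard textbook form, assumed here to be the target:

```latex
\pi(x) = \operatorname{Li}(x) + O\!\left( x \exp\!\left( -c \sqrt{\log x} \right) \right)
\quad \text{as } x \to \infty,
\qquad
\operatorname{Li}(x) = \int_{2}^{x} \frac{dt}{\log t}
```

Here $\pi(x)$ counts the primes up to $x$ and $c > 0$ is some absolute constant. The result has been known since the late 19th century; the difficulty lies in making every analytic step machine-checkable.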

Why it matters:

This is a speed and rigour gain, not a new mathematical discovery. If agents can formalise hard analytic results at this pace, large proof projects could move from years to weeks, with fewer hidden errors. It lowers the barrier to formal verification for research groups and tool builders. Human guidance and checks still matter, but throughput is the headline change.

Chatbots Face First Big Child-Safety Test

US regulators just ordered seven chatbot makers to hand over their child-safety plans, and OpenAI is rolling out parental controls in ChatGPT that let parents link teen accounts, set limits, and receive distress alerts. The FTC’s compulsory Section 6(b) inquiry targets “companion” chatbots and asks firms to detail testing, safeguards, and disclosures for minors. OpenAI says the new controls are starting in select regions with wider availability to follow.

Why it matters:

Safety for teens is moving from blog promises to hard requirements. Expect tighter age checks, clearer warnings, and gating of risky role-play features, which raises design and moderation costs. Schools and families get more control, but products must balance safety with privacy when monitoring for distress or linking accounts. The FTC study is not an enforcement case, yet it sets the groundwork for future rules and actions.