🤫 Your Jaw Is a Keyboard, ⚖️ AI’s Legal Reckoning, 💡 Smarter Care for Seniors

MIT’s silent-speech interface could change how we interact with devices. Meanwhile, Google, OpenAI, and Anthropic face rising legal and compute pressures.

🎵 Podcast

Don’t feel like reading? Listen to it instead.

By the way, if you like the podcast, please let me know (it takes 20 seconds).

📰 Latest News

Speak Without Speaking: MIT’s AlterEgo Lets You Command Devices

AlterEgo is an MIT-born wearable that lets you “speak” to computers without making a sound. Electrodes on the jaw and face pick up the tiny neuromuscular signals produced when you silently articulate words in your head, and an AI model then turns those signals into text or commands for a phone or smart speaker. The research began at the MIT Media Lab and is now being advanced by an MIT spinoff listed as Alterego Ai. The technology is still in early testing.

Why it matters:

Silent, hands-free input could help people who cannot use voice or keyboards, and it could work in places where speaking aloud is awkward or impractical, such as noisy environments. It also points to a new class of private, always-available interfaces in which computers respond to internal speech rather than just taps or microphones. The idea has been shown in lab prototypes and public demos, but making it reliable in everyday life will require more validation, better comfort, and clear privacy rules about which signals are captured and when.

Prove Your Training Data: Judge Pushes Back, Anthropic Powers On

A proposed $1.5 billion settlement over Anthropic’s use of pirated books has hit a judicial snag. U.S. District Judge William Alsup declined to approve the deal as filed, citing fairness and transparency concerns and signalling he may let the case go to trial if fixes fall short. Earlier rulings in the case distinguished legally purchased books (potentially fair use) from illegally obtained copies at issue here.

Meanwhile, Anthropic closed a $13 billion Series F at a $183 billion valuation, extending its runway for Claude and Claude Code.

Separately, Microsoft is reportedly testing Anthropic models inside Microsoft 365, and Office could let customers use Claude alongside OpenAI’s models, potentially accessed via AWS.

Why it matters:

The judge’s pushback is a big moment for AI training-data law: expect tougher “prove your provenance” standards, more opt-in licensing, and clearer notices to rightsholders. At the same time, Warner Bros. Discovery’s new lawsuit against Midjourney shows that entertainment companies are tightening the screws on unlicensed training and outputs, which is likely to push creative tools toward stricter filters and more licensing. If more courts follow, model builders face higher compliance costs and slower release cycles, while regulators gain cover to tighten rules on data sourcing and transparency. That could leave US labs less competitive against jurisdictions with looser regimes, primarily China.

The fundraise keeps Anthropic among the front-runners on model quality and enterprise features, but it also raises the bar for rivals who lack similar capital.

Microsoft’s trial of Claude inside Office points to a multi-model workplace: admins pick which engine powers which task (code, slides, spreadsheets), reducing single-vendor risk and letting teams optimise for cost, accuracy and policy. If this catches on more broadly, “choose your model” becomes table stakes across productivity suites.

Voice AI Helps Seniors Manage Blood Pressure

Emory University has developed AI voice agents designed to help older adults monitor their blood pressure at home. Users interact by voice, reporting their readings and receiving reminders and guidance on blood pressure management. The system is meant to work on standard smart speakers and smartphones. Launch dates and geographic availability have not yet been published.

Why it matters:

This technology could make routine blood pressure monitoring easier for older adults who struggle with manual devices or mobile apps. Healthcare teams would receive more regular and accurate updates from patients, potentially reducing the need for in-person clinic visits. Costs could also fall through fewer hospital admissions linked to unmanaged blood pressure and more efficient remote patient monitoring. Taken together, this approach could shift chronic care for seniors towards a more convenient, home-based model.

Google Keeps Chrome As AI Era Forces New Search Guardrails

A U.S. court’s 2025 remedies order in DOJ v. Google left Chrome inside Google, imposing conduct rules instead of a breakup. The original case, filed in 2020, focused on Google’s default-search deals with Apple and Android device makers, along with other tactics used to maintain its general search monopoly. In shaping remedies, the court weighed how new AI tools like ChatGPT and Google’s own Gemini are changing search, and it considered, then declined, a Chrome divestiture. Instead, the order requires easier default switching and data-sharing steps meant to open room for rivals.

Why it matters:

Antitrust is colliding with a market that is shifting under its feet. The judge found that Google illegally maintained a search monopoly but stopped short of breaking up Chrome, in part because generative-AI assistants are becoming new “access points” to information and are reshaping how people search. That makes the old browser-default playbook less decisive than it was in 2020, even as Google still holds powerful distribution advantages. For users and developers, the order aims to loosen Google’s grip by making defaults easier to change and by forcing more openness that could help AI assistants compete. For Google, it keeps Chrome and search together but puts guardrails on how the company ties AI and search inside its products. In the background, market pressure from AI challengers is mounting, with analysts warning that ChatGPT-style tools and other channels could chip away at Google’s search and ads stronghold over time.

OpenAI’s Chip Play: Escape Nvidia, Drop Costs, Scale Fast

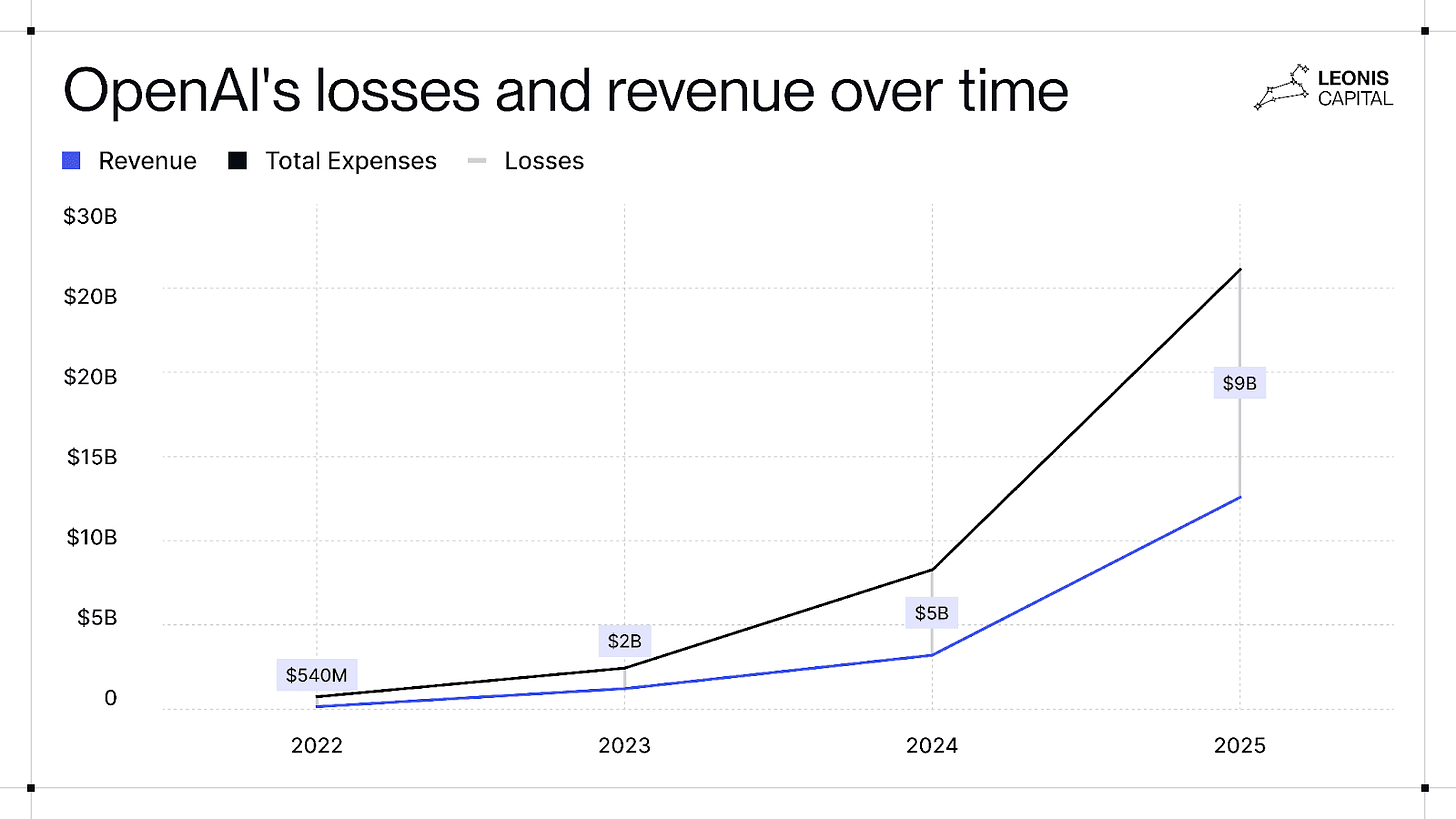

OpenAI is working with Broadcom on custom accelerators to cut its dependence on Nvidia and drive down per-query costs. The chip effort sits alongside a $100B+ data-center build and a projected $115B compute spend through 2029. Leonis’ analysis shows why this push matters: revenue is rising fast (projected at ~$12.7B in 2025), but expenses are rising faster (losses estimated at ~$5B in 2024 and ~$9B in 2025). OpenAI doesn’t expect to be cash-flow positive until 2029, and past GPU shortages slowed rollouts, hence the move to custom silicon, multi-sourcing, and dedicated facilities.

Why it matters:

OpenAI is trying to bring its costs and capacity under its own control. Custom chips and a $100B-plus buildout are a direct response to soaring compute bills, GPU shortages, and mounting losses, with the company not expected to turn cash-flow positive until 2029. If the plan works, OpenAI gets steadier capacity, faster models, and a lower cost per query. For users, that means more reliable service; for businesses, more predictable pricing. It also loosens dependence on Nvidia and any single cloud partner, giving OpenAI more freedom on timelines and terms. The tradeoff is risk: huge fixed costs, long construction lead times, and public scrutiny over power and water use at new sites. In short, this is a scale bet. Either OpenAI bends its cost curve and tightens control of its platform, or the weight of the build slows it down while rivals that rent capacity or build on open models close the gap.