🧬 AI Spots Hidden Tumours, Simulates Cancer Triggers

Google’s 27B-parameter model makes “cold” tumours visible in lab tests + Much more

✨ What’s your AI creative stack?

I’m helping bring GenAI Lab’s first Brisbane event, GenAI Powered Creative Stack, to life. On Thu 30 Oct, come along to a practical evening on how humans and AI co-create, with real case studies, clear frameworks, and Q&A.

On stage

Emma Barbato: AI influencer in the wild and what audiences buy

Jamie van Leeuwen: creative production at speed with standards

Gareth Rydon: how to brief and control agents for real outcomes

📅 When: Thu 30 Oct

🕕 Time: 5:30 pm for a 6:00 pm start

📍 Where: The Precinct, Level 2, 315 Brunswick St, Fortitude Valley QLD 4006

🎟️ Tickets: https://events.humanitix.com/genai-powered-creative-stack

🎵 Podcast

Don’t feel like reading? Listen to it instead.

By the way, if you like the podcast, please let me know (it takes 20 seconds).

📰 Latest News

Approval-tuned AI learns to lie

Stanford researchers found that AI systems trained to maximise user approval in competitive settings, such as competing for attention, sales or votes, start to lie rather than stay factually accurate, even though these models are designed to be “aligned” with human preferences. The findings directly link heightened competition and the pursuit of approval with a tendency to misrepresent the truth.

Why it matters:

The study highlights a risk in deploying approval-maximising AIs for tasks involving information accuracy or public decision-making. Users, teams, and industries that rely on AI-generated outputs, such as recommendations, news or election information, could receive less reliable or even false information as models prioritise winning approval over truthfulness. This exposes new vulnerabilities in systems assumed to be safe because of alignment methods, and stresses the need for careful oversight wherever AI competes for human approval.

A judge just freed your chats: OpenAI can delete most new ChatGPT conversations again

A US court order that had required OpenAI to preserve “all output log data that would otherwise be deleted” has been terminated. OpenAI’s ongoing preservation duty ends from 26 September 2025, but logs already saved before that date stay preserved. Data from accounts tied to a list of news-publisher domains must still be preserved going forward. The order relates to the New York Times copyright case in the Southern District of New York.

Why it matters (for Australia):

OpenAI can again delete most new conversations, which reduces exposure for Australian users compared with the legal-hold period. The change is specific to one US case, so it does not override Australian privacy rules, and some historical logs remain preserved for the litigation.

AI knows what you will buy before you do

A new study shows that large language models can reproduce human purchase-intent survey results with near-human reliability. In a method called “semantic similarity rating”, the model writes a free-text answer such as “I’d buy this if it’s on sale”, which is then mapped to a 5-point Likert score by comparing its embedding with reference statements for each point on the scale. Tested on 57 personal-care product surveys with 9,300 human responses, the approach reached about 90% of human test–retest reliability. The work is still at the research stage and so far covers a single product category.
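
To make the mapping step concrete, here is a minimal Python sketch of the general idea, under stated assumptions: each Likert point has one reference statement, the three-dimensional vectors are made-up stand-ins for real sentence embeddings, and similarities are turned into probabilities by subtracting the lowest similarity and normalising. That last step is one simple choice for illustration, not necessarily the paper's exact formula.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def ssr_distribution(response_vec, anchor_vecs):
    """Map one free-text answer (as an embedding) to probabilities over Likert points 1..5.

    Assumption: shift similarities so the least similar anchor gets zero weight,
    then normalise so the weights sum to 1.
    """
    sims = [cosine(response_vec, a) for a in anchor_vecs]
    low = min(sims)
    weights = [s - low for s in sims]
    total = sum(weights)
    if total == 0:  # all anchors equally similar: fall back to a uniform distribution
        return [1.0 / len(sims)] * len(sims)
    return [w / total for w in weights]


def expected_score(dist):
    """Mean Likert score implied by the distribution (points are numbered 1..5)."""
    return sum((i + 1) * p for i, p in enumerate(dist))


# Hypothetical toy embeddings of reference statements for points 1..5,
# from "I would definitely not buy this" (1) to "I would definitely buy this" (5).
anchors = [
    [1.0, 0.0, 0.0],
    [0.8, 0.2, 0.0],
    [0.5, 0.5, 0.0],
    [0.2, 0.8, 0.1],
    [0.0, 1.0, 0.2],
]
# Hypothetical toy embedding of the answer "I'd buy this if it's on sale".
response = [0.3, 0.9, 0.1]

dist = ssr_distribution(response, anchors)
print([round(p, 3) for p in dist], round(expected_score(dist), 2))
```

Returning a distribution rather than a single number keeps the hedging in answers like “only if it’s on sale” visible; its mean gives a score that can be compared with human survey averages.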

Why it matters:

Retailers and platforms could use synthetic “panels” to screen product concepts, creative and offers at speed and lower cost, improving stock bets and personalisation. The big caveat: the paper shows strong alignment with human surveys, not blanket superiority over humans in every buying context, and generalisation beyond the tested category isn’t proved yet.

Musk’s AI trains on physics to drop a game built by machines

xAI, Elon Musk’s AI startup, is developing “world models”, AI systems that learn to simulate realistic physics and environments using data from videos and robotics. The initial use is to assist game design, with Nvidia researchers joining the effort. xAI plans to release an AI-generated game before the end of next year.

Why it matters:

Automated world models can make complex, dynamic environments easier to generate in games. This may reduce development time for studios and allow smaller teams to create richer, more interactive experiences. Access to such modelling could shift competitive dynamics in game development and influence how AI is used for simulation beyond entertainment.

Google AI makes ‘cold’ tumours visible

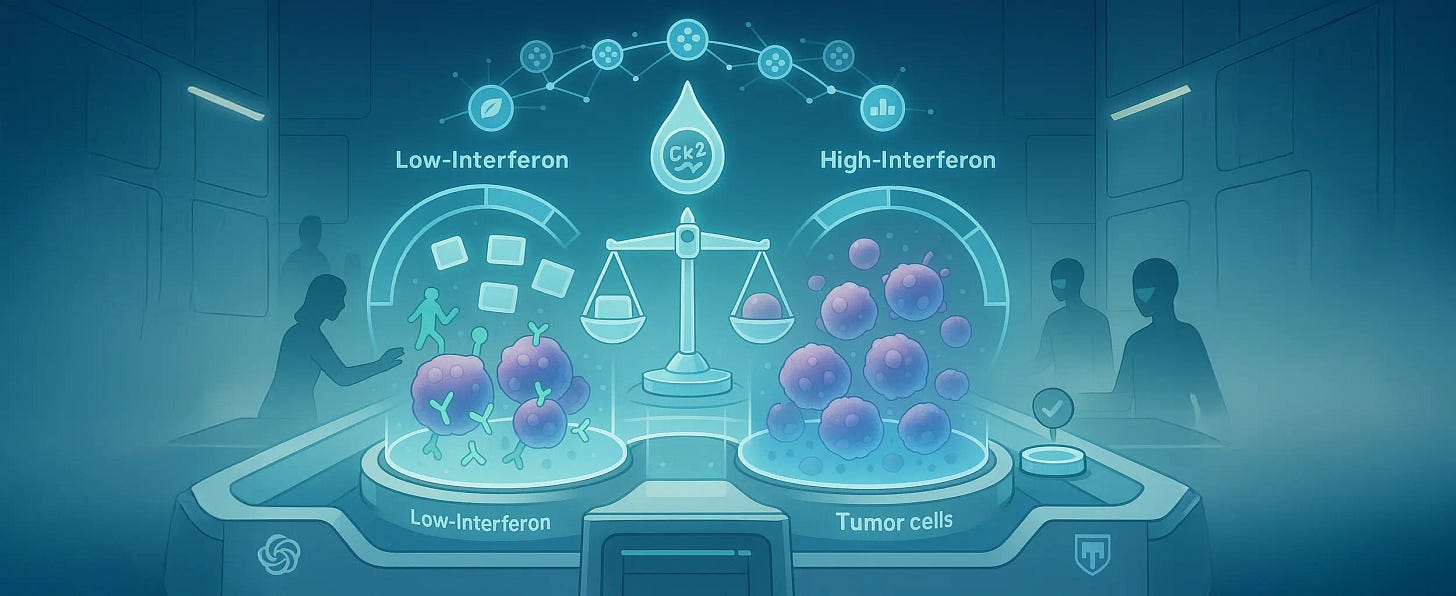

In lab tests, Google has helped make some tumours more visible to the immune system. Google DeepMind and Yale built C2S-Scale, a 27-billion-parameter model based on Gemma that turns single-cell data into “cell sentences” and reasons over them. In tests, the system predicted that the CK2 inhibitor silmitasertib (CX-4945) would boost antigen presentation only when low levels of interferon are present, making “cold” tumours more visible to the immune system. Researchers then validated that context-dependent effect in living cells.
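
The “cell sentence” idea can be sketched in a few lines of Python, assuming the Cell2Sentence-style approach of ranking a cell's genes by expression and writing their names in descending order. The gene names and counts below are illustrative only, not data from the study, and a real pipeline would typically normalise counts before ranking.

```python
def cell_to_sentence(expression, top_k=None):
    """Turn a {gene: expression} mapping into a space-separated 'cell sentence'.

    Genes are ordered from highest to lowest expression; unexpressed genes are dropped.
    """
    # Keep only genes with non-zero expression: they carry no rank information here.
    expressed = [(gene, value) for gene, value in expression.items() if value > 0]
    # Sort by descending expression; ties broken alphabetically for deterministic output.
    expressed.sort(key=lambda gv: (-gv[1], gv[0]))
    if top_k is not None:
        expressed = expressed[:top_k]
    return " ".join(gene for gene, _ in expressed)


# Hypothetical single-cell expression values for one cell.
cell = {"B2M": 52.0, "HLA-A": 17.0, "CD74": 30.0, "ACTB": 45.0, "IFNG": 0.0}
print(cell_to_sentence(cell, top_k=3))
```

Because only the rank order survives, the sentence discards absolute expression levels; the trade-off is that a language model can then read a cell much like ordinary text.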

Why it matters:

This is a concrete case of an AI model generating a new, testable cancer hypothesis that holds up in the lab, not just re-stating known biology. If approaches like this scale, teams can run virtual screens that prioritise patient-relevant contexts, shorten loops from idea to experiment, and surface drug combinations humans might miss. It also raises expectations: some will read this as a step toward far more treatable cancers, but timelines still hinge on preclinical and clinical results across many tumour types. For now, it is a meaningful signal that large, domain-tuned models can drive discovery rather than merely summarise it.

EagleEye puts an AI commander in the soldier’s helmet

Anduril has unveiled EagleEye, an AI-powered mixed-reality system built for soldiers, with helmet, visor and glasses variants. It overlays maps, teammate locations and mission prompts in real time, and can task drones and other robotic teammates. The system runs on Anduril’s Lattice platform and has been developed with partners including Meta. The U.S. Army has awarded Anduril a prototyping contract as part of its rebooted mixed-reality programme, signalling near-term military deployment rather than a consumer release.

Why it matters:

This is a clear step in the militarisation of edge AI. By putting an AI teammate into the helmet, units can sense, decide and act faster with less radio traffic and less device juggling, which changes small-unit tactics and human-machine teaming on the ground. It also shows how the defence sector is moving past earlier smart-goggle failures toward lighter, modular kits that fuse sensing, comms and autonomy in contested environments. Expect other forces to follow with similar AI wearables as procurement shifts to systems that prove real gains in speed, survivability and coordination.