📉 AI Bubble Trouble?, 🕵️ Spy Agency Raises Alarm, 👁️ Teaching AI to See Like Us

Slowing chip revenue, foreign influence campaigns, and vision models that finally get the concept.

🎵 Podcast

Don’t feel like reading? Listen to it instead.

By the way, if you like the podcast, please let me know (20 seconds).

📰 Latest News

OpenAI’s GPT-5.1: Less small talk, more thinking where it counts

GPT-5.1 is a mid-cycle upgrade to the GPT-5 family that changes how the model spends its effort rather than introducing an entirely new capability tier. The new Instant model is tuned to be warmer and more conversational by default and better at following precise instructions, while the Thinking variant now adapts its “thinking time” to the difficulty of the task, producing shorter answers on easy prompts and up to about 70 per cent more internal reasoning on the hardest ones. OpenAI is also leaning into personalisation: users can now pick built-in styles such as Professional, Friendly or Quirky, or tune traits like conciseness and warmth, so that the same underlying model can present very differently in ChatGPT.
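To make the “thinking time” idea concrete, here is a toy sketch of a difficulty-scaled reasoning budget. It is not OpenAI’s mechanism, which has not been published in this form: the `difficulty` score (0 for trivial, 1 for hardest), the baseline token count and the 0.5× floor for easy prompts are all hypothetical; only the 1.7× ceiling echoes the “up to about 70 per cent more” figure above.

```python
def thinking_budget(difficulty: float, baseline_tokens: int,
                    easy_multiplier: float = 0.5,  # hypothetical floor for trivial prompts
                    hard_multiplier: float = 1.7,  # ~70% more reasoning on the hardest prompts
                    ) -> int:
    """Toy reasoning-token budget that scales linearly with an estimated difficulty.

    `difficulty` is a hypothetical score in [0, 1]; values outside that range are clamped.
    """
    d = min(max(difficulty, 0.0), 1.0)
    # Linear interpolation between the easy and hard multipliers.
    multiplier = easy_multiplier + (hard_multiplier - easy_multiplier) * d
    return round(baseline_tokens * multiplier)


for label, d in [("easy", 0.0), ("medium", 0.5), ("hard", 1.0)]:
    print(label, thinking_budget(d, baseline_tokens=1000))
```

The linear ramp is an arbitrary choice for illustration; a real system would also need some way to estimate difficulty in the first place, which is the hard part.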

Why it matters:

For everyday users, the headline change is feel, not frontier IQ. Simple queries should resolve faster and with fewer off-topic digressions, while difficult work such as multi-step analysis or coding gets more computation without the user having to think about settings. That is useful if you rely on ChatGPT as a work tool: fewer trivial replies padded out for length, more persistence on genuinely hard problems. The expanded tone controls matter in a different way. They formalise what people were already doing by prompt hacking and make it easier for teams to standardise a “house voice” across support, drafting and ideation. For developers and power users, the subtext is that OpenAI is shifting from big, infrequent jumps to more regular tuning of behaviour on top of the same core models.

ASIO sounds the alarm: AI is supercharging propaganda and polarisation

Australia’s spy chief warns that AI is becoming a force multiplier for foreign influence. In a speech last week, ASIO Director-General Mike Burgess said AI can push radicalisation and disinformation “to entirely new levels”, citing pro-Russian influencer links and campaigns that inflame domestic tensions. At the same time, investigators in Europe have detailed a Russian “Pravda” content network that mass-republishes propaganda across languages and is seeping into Wikipedia citations and chatbot responses.

Why it matters:

This is not just about single falsehoods. It is about shaping what AI retrieves and repeats. When adversaries flood the web with coordinated stories, models trained to reflect the information environment start to surface those frames, making influence scalable and personalised. For Australia, the immediate risks are social cohesion, election integrity and community safety; the medium-term risks are degraded knowledge systems if AI tools normalise tainted sources.

AI is hollowing out the middle of the job market: analysis of 180 million job ads

A scan of 180 million job ads from 2023–2025 shows AI hitting some roles hard while leaving others largely intact. Overall postings are down about 8 per cent, but postings for computer graphics artists are down 33 per cent and for photographers and writers about 28 per cent, with journalist and PR roles also sliding. Creative execution jobs are shrinking, while creative direction and product design are roughly holding the line. Compliance and sustainability roles are also being hammered: postings for specialists and chiefs in both areas are down 25–35 per cent as some firms scale back ESG and regulatory spending.

On the upside, machine learning engineer is the fastest-growing title, up about 40 per cent this year after a big jump in 2024, and data, robotics and data-centre roles are also rising. Postings for senior leaders (directors, VPs, C-suite) are barely down at all, while individual-contributor roles have fallen the most. Software engineering, data analyst, customer service and most sales jobs are basically stable, with only pockets of decline, such as some front-end and sales-ops roles.

Why it matters:

AI is not flattening the whole labour market; it is hollowing out specific layers. Routine, promptable work such as asset creation, generic copy, basic photography and structured note-taking is being automated first, while jobs that mix judgment, relationships and domain context are proving resilient. At the same time, AI tools are giving senior leaders more leverage, letting a smaller layer of decision-makers direct more work without as many people underneath them. For workers, the message is blunt: move up the value chain or move closer to the machines. Strategy beats execution, owning the client beats just pushing pixels, and knowing how to direct AI beats competing with it head-on. If reskilling does not keep pace, expect a wider gap between protected, high-leverage roles and a growing pool of displaced mid-skill talent.

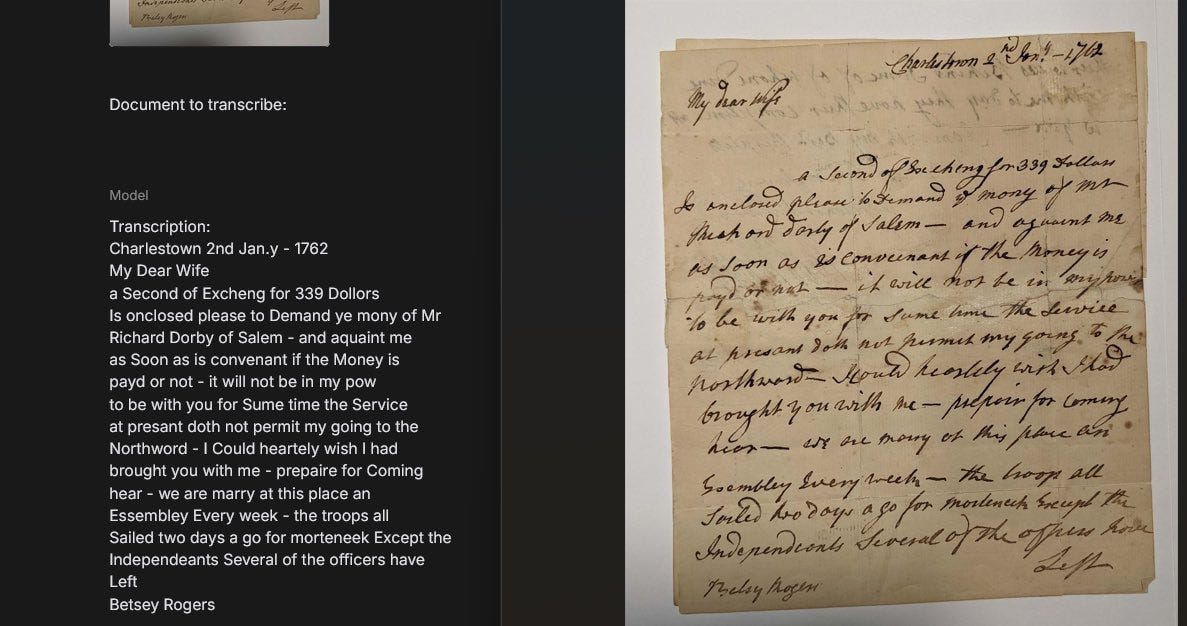

Expert-level palaeography on tap: messy ledgers turned into searchable history

A new, unannounced Google model seen in AI Studio tests is reading messy historical handwriting at expert level and, crucially, reasoning through context. In one ledger, it treated a cryptic “145” as a weight, converted shillings and pence to a common unit, reconciled the non-decimal total, and inferred 14 lb 5 oz, folding perception and domain knowledge into a single pass. The upshot: historians can process far more letters, ledgers and marginalia, including material once too minor to justify weeks of expert time, and the bottleneck shifts from deciphering to interpreting.
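The ledger reasoning described above is non-decimal arithmetic that is easy to get wrong by hand. The sketch below works through the same kind of inference with invented figures (a unit price of 1s 4d per lb and a line total of 19s 1d); these are not the values from the actual ledger, and the helper names are hypothetical. Only the unit conversions are standard: 12 pence to the shilling, 20 shillings to the pound, and 16 ounces to the avoirdupois pound.

```python
from fractions import Fraction

# Pre-decimal British currency: 12 pence (d) = 1 shilling (s), 20 shillings = 1 pound (£).
PENCE_PER_SHILLING = 12
SHILLINGS_PER_POUND = 20
# Avoirdupois weight: 16 ounces = 1 pound (lb).
OUNCES_PER_LB = 16


def to_pence(pounds: int = 0, shillings: int = 0, pence: int = 0) -> int:
    """Convert a £sd amount to a single common unit (pence)."""
    return (pounds * SHILLINGS_PER_POUND + shillings) * PENCE_PER_SHILLING + pence


def infer_weight(total_lsd, price_per_lb_lsd):
    """Work back from a line total and a unit price (both £sd tuples) to lb and oz."""
    quantity_lb = Fraction(to_pence(*total_lsd), to_pence(*price_per_lb_lsd))
    whole_lb = quantity_lb.numerator // quantity_lb.denominator
    ounces = (quantity_lb - whole_lb) * OUNCES_PER_LB
    return whole_lb, ounces


def matches_entry(raw: str, lb: int, oz: Fraction) -> bool:
    """Check whether a cryptic figure such as '145' could be read as '14' lb '5' oz."""
    return oz.denominator == 1 and raw == f"{lb}{oz.numerator}"


# Hypothetical entry: 1s 4d per lb, total 19s 1d, weight written as "145".
lb, oz = infer_weight(total_lsd=(0, 19, 1), price_per_lb_lsd=(0, 1, 4))
print(lb, "lb", oz, "oz", matches_entry("145", lb, oz))
```

Using `Fraction` keeps the division exact, so a weight that does not come out to a whole number of ounces is flagged rather than silently rounded.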

Why it matters:

When perception and context arrive together, the work changes. Reliable transcription plus on-the-fly inference turns vast runs of letters, ledgers and marginalia into clean, searchable material. That scale lets historians build timelines in days, follow people and goods across regions, and recover overlooked voices that only emerge when you read widely rather than selectively. The result is not just faster throughput but new kinds of questions: how influences spread, where power and money moved, which events were truly connected.

🌐 More from Generative History

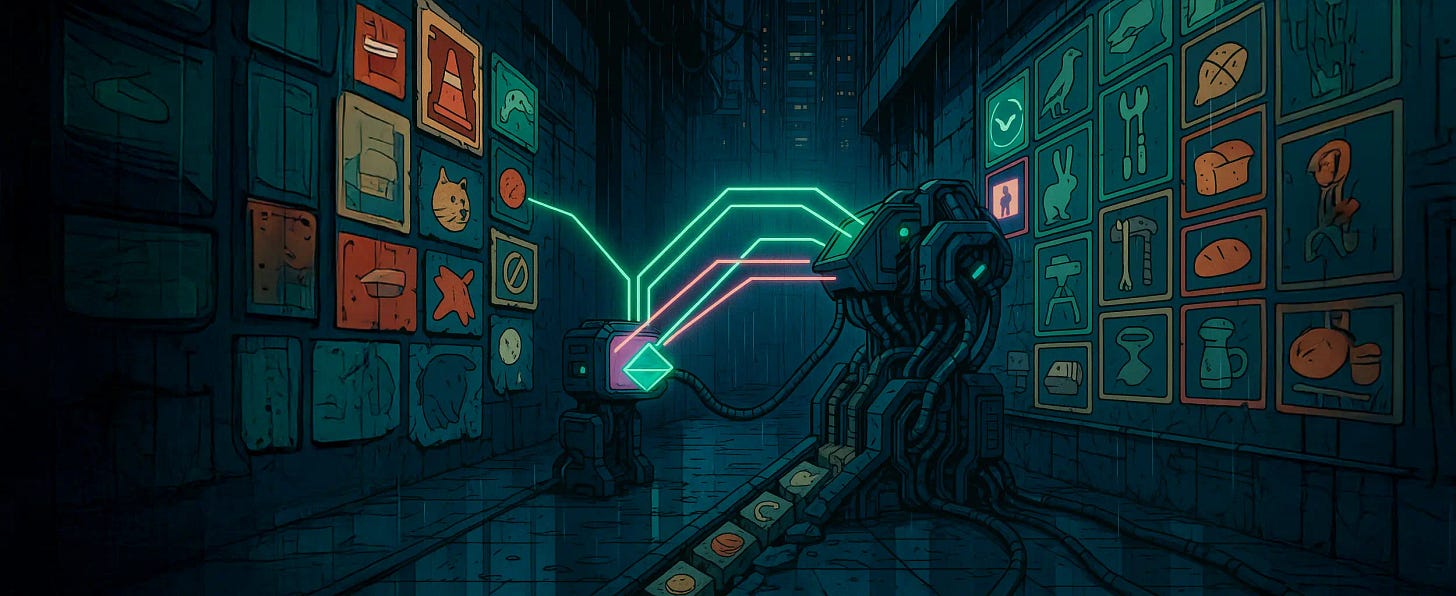

Teaching AI to see like humans

Current vision models often get the “right” answer for the wrong reason, latching onto colours and textures instead of concepts. Change the background and a model that knows a hundred dog breeds may suddenly group a dog with a traffic cone. DeepMind’s new Nature paper tackles that directly: it measures how models differ from humans on a simple “odd one out” test, then actively rewires their internal representation so they cluster images the way people do.
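The odd-one-out test is simple to state in code. The sketch below uses toy three-dimensional “embeddings” invented for illustration: it scores each pair by cosine similarity, treats the item left over from the most similar pair as the odd one out, and turns the three pair similarities into probabilities with a softmax. This is one common way to model the task, not necessarily the exact formulation in DeepMind’s paper.

```python
import math
from itertools import combinations


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def odd_one_out_probs(embs, temperature=1.0):
    """Probability that each of three items is the odd one out.

    Item k is the odd one out when the other two form the most similar pair,
    so its logit is the similarity of the pair that excludes it.
    """
    sims = {(i, j): cosine(embs[i], embs[j]) for i, j in combinations(range(3), 2)}
    logits = []
    for k in range(3):
        i, j = [x for x in range(3) if x != k]
        logits.append(sims[(i, j)] / temperature)
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def odd_one_out(embs):
    probs = odd_one_out_probs(embs)
    return max(range(3), key=lambda k: probs[k])


# Toy embeddings (hypothetical): two animals and a traffic cone.
dog = [1.0, 0.9, 0.1]
cat = [0.9, 1.0, 0.0]
cone = [0.0, 0.1, 1.0]
print(odd_one_out([dog, cat, cone]), odd_one_out_probs([dog, cat, cone]))
```

A model that clusters by colour or texture would produce embeddings in which the wrong pair scores highest, which is exactly the disagreement with human choices that the paper measures.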

The team trains a small adapter on top of a pretrained model using human odd-one-out judgements (the THINGS dataset), uses the adapted model as a “teacher” to generate millions of synthetic odd-one-out examples (AligNet), and then fine-tunes student models on this signal. After alignment, the model’s internal map stops being a jumble of objects and instead forms human-like hierarchies: animals with animals, tools with tools, food with food. The aligned models both agree with people more often and perform better on standard machine-learning tests such as few-shot learning and robustness to distribution shift.
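One plausible way to turn the teacher’s judgements into a training signal, assuming a standard distillation setup, is to have the student match the teacher’s soft odd-one-out probabilities by minimising a KL divergence. The paper’s actual objective may differ or add regularisation terms, and the probability values below are hypothetical; in real training this loss would be computed on model outputs inside a framework with automatic differentiation rather than in plain Python.

```python
import math
from typing import Sequence


def kl_divergence(teacher: Sequence[float], student: Sequence[float], eps: float = 1e-12) -> float:
    """KL(teacher || student) between two odd-one-out distributions over the same triplet."""
    return sum(p * math.log(p / max(q, eps)) for p, q in zip(teacher, student) if p > 0)


def distillation_loss(teacher_batch, student_batch) -> float:
    """Mean KL over a batch of triplets; lower means the student mimics the teacher more closely."""
    losses = [kl_divergence(t, s) for t, s in zip(teacher_batch, student_batch)]
    return sum(losses) / len(losses)


# Hypothetical soft labels: probability that item 0, 1 or 2 is the odd one out.
teacher_batch = [[0.05, 0.05, 0.90], [0.70, 0.20, 0.10]]
aligned_student = [[0.06, 0.06, 0.88], [0.65, 0.25, 0.10]]
unaligned_student = [[0.40, 0.40, 0.20], [0.10, 0.20, 0.70]]

print(distillation_loss(teacher_batch, aligned_student))
print(distillation_loss(teacher_batch, unaligned_student))
```

Soft labels carry more information than a single “right answer”: they tell the student how confident the teacher is, which helps it learn graded similarity rather than hard categories.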

Why it matters:

Most real-world tasks need concept-level vision, not just pixel matching. A system that groups a car with a plane because both are large metal vehicles, or separates a cat from a starfish despite similar colours, behaves more like a partner with common sense than a brittle texture detector. DeepMind’s method shows you can push general-purpose vision models toward that human structure without wrecking their existing skills, and even improve their generalisation along the way. That is a concrete step toward multimodal systems that see, explain and fail in ways humans can anticipate, which is exactly what you want if you are putting AI into cars, factories, medical workflows or safety-critical agents.

Are we in an AI bubble?

AI infrastructure spending is starting to look stretched. TSMC, the key manufacturer behind Nvidia’s AI chips, just reported its slowest monthly revenue growth in 18 months, about 17 per cent year on year in local currency, even as Big Tech plans more than 400 billion dollars in AI capex next year. At the same time, “Big Short” investor Michael Burry is accusing hyperscalers of juicing earnings by extending the assumed useful life of their AI servers, a practice he estimates will understate depreciation by roughly 176 billion dollars over three years. SoftBank has quietly cashed out nearly 9 billion dollars of Nvidia stock to fund new AI bets, while OpenAI is talking to Washington about loan guarantees for chip plants and lining up 1.4 trillion dollars in infrastructure commitments despite heavy losses.
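Burry’s argument rests on simple straight-line depreciation arithmetic: stretch an asset’s assumed useful life and each year’s expense shrinks, so reported profit rises even though the cash has already been spent. The sketch below uses hypothetical figures (90 billion dollars of servers, a three-year versus a six-year life, no salvage value) rather than any company’s actual accounts, and it is not Burry’s own model.

```python
def annual_depreciation(cost: float, useful_life_years: int, salvage: float = 0.0) -> float:
    """Straight-line depreciation: spread (cost - salvage) evenly over the useful life."""
    return (cost - salvage) / useful_life_years


def expense_over_window(cost: float, useful_life_years: int, window_years: int) -> float:
    """Total depreciation booked in the first `window_years` (capped at the asset's life)."""
    return annual_depreciation(cost, useful_life_years) * min(window_years, useful_life_years)


capex = 90.0  # hypothetical: 90 billion dollars of servers bought at the start of year 1
short_life = expense_over_window(capex, useful_life_years=3, window_years=3)
long_life = expense_over_window(capex, useful_life_years=6, window_years=3)
print(f"3-year life: {short_life:.0f}bn expensed; 6-year life: {long_life:.0f}bn; "
      f"earnings boost over 3 years: {short_life - long_life:.0f}bn")
```

Nothing about the servers changes in this example; only the accounting assumption does, which is why the debate centres on whether longer lives match how quickly AI hardware actually becomes obsolete.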

Why it matters:

The money going into AI is real, but the cash coming back is still mostly hypothetical. Slowing growth at the industry’s key supplier, aggressive accounting at the hyperscalers, a major backer taking profits in Nvidia, and OpenAI floating government-supported financing are all classic late-cycle signals. None of this proves the AI build-out is a bubble, yet it does suggest the risk has shifted from “will AI be big” to “will these investments ever earn their keep.” If revenue and genuine productivity gains do not catch up with the trillions in planned spend, the reckoning will hit earnings, not just lofty share prices.

🌐 More from Bloomberg (paywall)
