🧠 Musk’s Grokipedia Launches, 🧾 AI Fakes Expense Reports, 🏗️ OpenAI Rewires

xAI challenges Wikipedia with self-fact-checked pages, AI receipts top $1m in fraud, and OpenAI formalises its PBC shift.

✨ Last Chance: Tonight at The Precinct

I’m helping bring GenAI Lab’s first Brisbane event to life: GenAI Powered Creative Stack. On Thu 30 Oct, come along to a practical evening on how humans and AI co-create, with real case studies, clear frameworks, and Q&A.

On stage

Emma Barbato: AI influencer in the wild and what audiences buy

Jamie van Leeuwen: creative production at speed with standards

Gareth Rydon: how to brief and control agents for real outcomes

📅 When: Thu 30 Oct

🕕 Time: 5:30 pm for a 6:00 pm start

📍 Where: The Precinct, Level 2, 315 Brunswick St, Fortitude Valley QLD 4006

🎟️ Tickets: https://events.humanitix.com/genai-powered-creative-stack

🎵 Podcast

Don’t feel like reading? Listen to it instead.

By the way, if you like the podcast, please let me know (20 seconds).

📰 Latest News

OpenAI Rewrites Its Charter: PBC governance with an independent AGI check

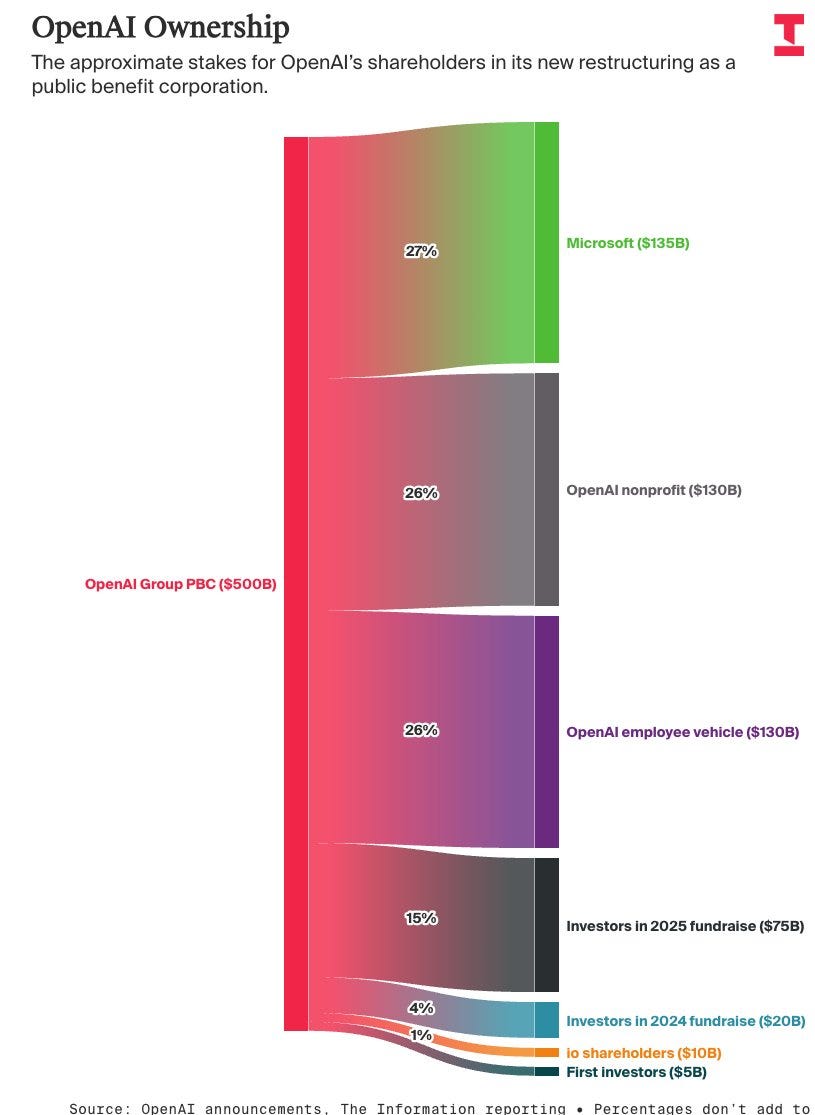

OpenAI has completed its long-planned conversion into OpenAI Group PBC, a public benefit corporation controlled by the OpenAI Foundation, and signed a definitive agreement with Microsoft that resets the economics and guardrails of their partnership. Microsoft now holds about 27% of OpenAI’s for-profit arm, a stake valued around US$135 billion in a deal that places OpenAI near US$500 billion overall. The agreement adds an independent expert panel to verify any future “AGI” declaration, extends Microsoft’s commercial rights to OpenAI models and products through 2032, and includes an additional US$250 billion Azure purchase commitment from OpenAI. Regulators in Delaware and California had scrutinised the move; the restructuring proceeds after that review.

What actually changed:

The new pact preserves OpenAI as Microsoft’s frontier-model partner while giving both sides more room: Microsoft retains broad product and model IP rights even if OpenAI later claims AGI, and OpenAI gains flexibility to raise capital and operate under a PBC charter that bakes public-interest duties into corporate decision-making. The AGI call must be externally validated rather than declared unilaterally, addressing a long-standing ambiguity in the prior setup.

Why it matters:

This is a structural reset for the AI era. By moving to a PBC, OpenAI’s directors must weigh stated public-benefit objectives alongside shareholder value, which will shape choices on safety releases, data use, and access. The Microsoft terms stabilise the go-to-market engine—rights clarity to 2032 and mammoth Azure spend—while loosening exclusivities enough for both parties to pursue their own long-range bets. Net effect: clearer governance, a thicker capital pipeline, and a partnership designed to survive an AGI milestone rather than be blown up by it.

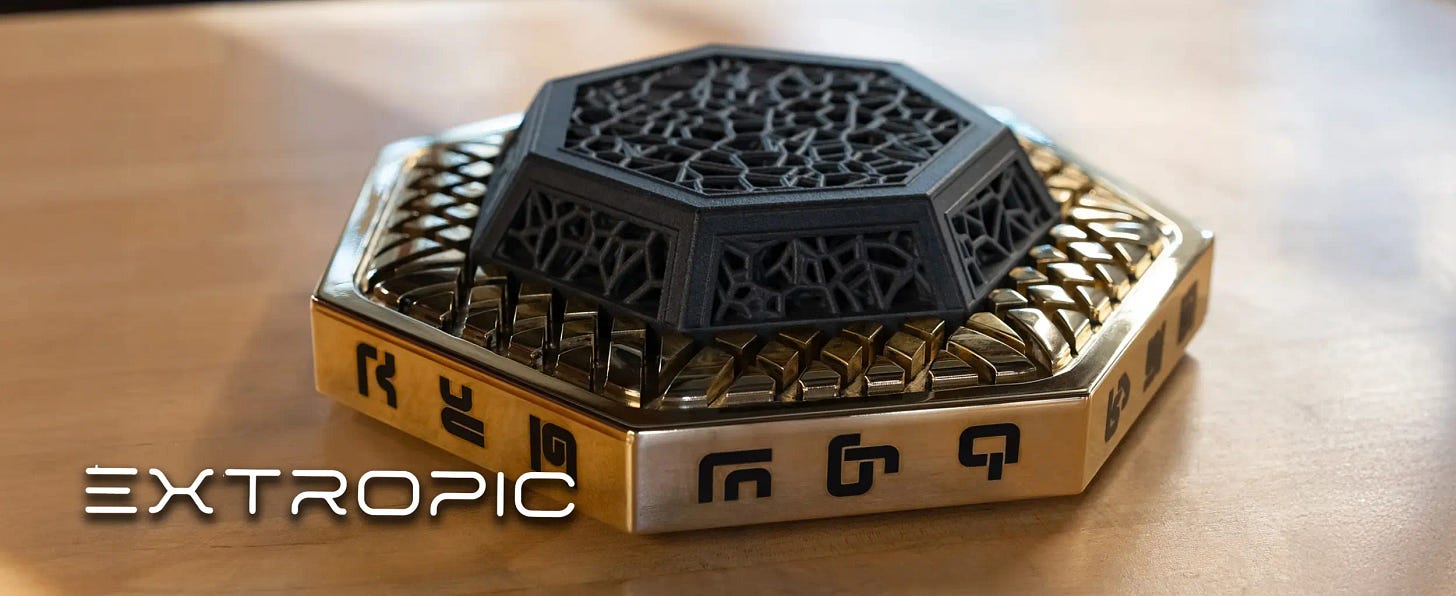

Heat Beats Maths: Extropic’s Sampler Claims 100,000× Efficiency

Extropic has unveiled a new class of machine built for sampling rather than matrix maths. The system centres on Thermodynamic Sampling Units (TSUs), a development board called XTR-0, and a fresh generative algorithm dubbed the Denoising Thermodynamic Model. In early tests on small benchmarks, Extropic claims energy efficiency gains of up to 100,000× compared with today’s deep-learning stacks on GPUs. The toolkit ships with a Python simulator so researchers can code against the architecture now, ahead of larger chips.

Why it matters:

AI is hitting a power wall. Data centres are struggling to source megawatts, while serving bigger models to more people pushes energy costs up faster than revenue. Extropic’s pitch is simple: increase AI per joule. If a dedicated sampler can do the probabilistic parts of generative AI with two, three or four orders of magnitude less energy, inference becomes cheaper, cooler and easier to deploy at the edge. It also broadens the design space for hybrid systems where GPUs handle dense linear algebra and TSUs take over the sampling loop.

How it works, in plain English:

Modern generative models are, at heart, controlled dice throws. You compute a set of probabilities, then you sample from them to decide the next token or the next pixel. GPUs compute those probabilities with vast numbers of multiplications, then call a software random number generator. TSUs flip that around. They make the randomness the main event.
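To make the "controlled dice throw" concrete, here is a minimal Python sketch of the conventional software route: turn raw scores into probabilities, then draw from them with a pseudo-random number generator. The scores, the function names and the three-item vocabulary are made up for illustration; this is not taken from any particular model or from Extropic's toolkit.

```python
import math
import random

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sample_index(probs, rng):
    """Draw one index according to probs (the 'dice throw')."""
    r = rng.random()
    cumulative = 0.0
    for i, p in enumerate(probs):
        cumulative += p
        if r < cumulative:
            return i
    return len(probs) - 1  # guard against floating-point rounding

# Hypothetical scores for three candidate tokens.
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)

rng = random.Random(0)  # software random number generator
counts = [0, 0, 0]
for _ in range(10_000):
    counts[sample_index(probs, rng)] += 1
```

Over many draws, the counts track the probabilities: the highest-scoring token is picked most often, but not every time. That final draw is the step a TSU aims to do in hardware.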

Inside a TSU are huge grids of tiny circuits called p-bits. Each p-bit is like a coin that constantly jitters between heads and tails because of the natural thermal noise already present in the transistors. That noise is not a bug. It is the fuel. By nudging each coin with small electrical biases, and by letting neighbouring coins influence one another, the whole grid starts to settle into patterns that reflect the probability distribution you asked for. Think of it as shaping a landscape of valleys and letting a swarm of particles roll around. Where they spend more time corresponds to the outcomes you should sample more often.

Because the computation happens locally, with short hops between nearby circuits, the chip avoids the expensive long-distance data shuffling that burns energy in GPUs. The result is a physical sampler that can churn out high-quality samples while sipping power, which is why the efficiency headline is so large.
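For the curious, the jittering-coin picture above can be imitated in a few lines of Python. This is a software toy under stated assumptions, not Extropic's hardware design or its simulator: it uses the textbook p-bit update rule (flip to "heads" with probability (1 + tanh(input)) / 2, which amounts to Gibbs sampling on a small Ising-style model), and the coupling matrix `J`, bias list `h` and function names are all hypothetical.

```python
import math
import random

def pbit_sweep(state, J, h, rng):
    """Update every p-bit once, each looking only at its neighbours."""
    n = len(state)
    for i in range(n):
        # Local input: own bias plus the pull from coupled neighbours.
        field = h[i] + sum(J[i][j] * state[j] for j in range(n) if j != i)
        # Noisy coin: a stronger input makes +1 more likely, never certain.
        p_up = 0.5 * (1.0 + math.tanh(field))
        state[i] = 1 if rng.random() < p_up else -1

def run(J, h, sweeps, seed=0):
    """Let the grid jitter and count how often each pattern appears."""
    rng = random.Random(seed)
    state = [rng.choice((-1, 1)) for _ in h]
    counts = {}
    for _ in range(sweeps):
        pbit_sweep(state, J, h, rng)
        key = tuple(state)
        counts[key] = counts.get(key, 0) + 1
    return counts

# Two coins that prefer to agree (positive coupling), with no bias.
J = [[0.0, 1.0],
     [1.0, 0.0]]
h = [0.0, 0.0]
sweeps = 20_000
counts = run(J, h, sweeps)
aligned = counts.get((1, 1), 0) + counts.get((-1, -1), 0)
aligned_fraction = aligned / sweeps
```

With this coupling the two coins spend most of their time agreeing, which is the "valleys" idea in miniature: the patterns visited most often are the ones the distribution favours. On a CPU every update costs arithmetic plus a random number; the TSU pitch is that thermal noise and local wiring do this work physically.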

Grokipedia Launches: An AI Encyclopaedia That Writes and Checks Itself

xAI has launched Grokipedia, an AI-generated encyclopaedia Musk pitches as a “more truthful” alternative to Wikipedia. The site went live this week with roughly 885,000 entries; content is written and “fact-checked” by xAI’s Grok model rather than human editors. Early reports note outages at launch and allege that some pages adapt or mirror Wikipedia text under Creative Commons terms. Unlike Wikipedia, users can’t directly edit articles but can flag errors. Musk frames the project as correcting perceived bias and advancing xAI’s goal of “understanding the universe.”

Why it matters:

If Grokipedia gains traction, it tests whether an AI-first reference work can keep pace with fast-moving topics without the transparency and community oversight that underpin Wikipedia’s trust. It also sharpens a live debate about provenance: critics are already probing ideological tilt and verbatim reuse, while Wikipedia’s stewards argue that human, volunteer governance remains a core advantage. For media, search and education, the outcome influences how “authoritative” knowledge is compiled, audited and cited in an era when AI both consumes and produces the web’s source material.

AI Receipts Surge: 14 per cent of Fraud and >US$1m Flagged in 90 Days

Expense-audit platforms report a sharp rise in AI-forged receipts, with AppZen saying 14 per cent of the fraudulent documents it caught in September were AI-generated, up from none last year. Fintech firm Ramp separately reported flagging more than US$1 million in suspect invoices within 90 days. These fakes are photorealistic, complete with textures, itemised lines and plausible tax, and are trivial to produce with modern image models, making human “eyeball checks” unreliable.

Why it matters:

Traditional expense controls assume a real receipt exists and can be verified visually; that premise no longer holds. Finance teams will need to move from image inspection to evidence triangulation: card-issuer data, merchant APIs, booking IDs, GPS and time stamps, plus AI that scores inconsistencies across the whole claim. Expect tighter policies (mandatory e-receipts, direct-from-merchant feeds), slower reimbursements during the transition, and more targeted audits as companies balance loss prevention with employee experience. The immediate takeaway is simple: do not trust the picture—trust the data behind it.
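As a rough illustration of "trust the data behind it", here is a minimal Python sketch that cross-checks a claimed receipt against a card-issuer record instead of inspecting the image. Everything in it is an assumption for illustration: the `Claim` and `CardTransaction` fields, the two-day tolerance and the one-cent threshold are hypothetical, and it does not describe how AppZen, Ramp or any other vendor actually works.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

@dataclass
class Claim:
    """What the employee submitted (hypothetical fields)."""
    merchant: str
    amount: float
    receipt_time: datetime

@dataclass
class CardTransaction:
    """What the card issuer recorded (hypothetical fields)."""
    merchant: str
    amount: float
    posted_time: datetime

def inconsistencies(claim: Claim,
                    txn: Optional[CardTransaction],
                    max_gap: timedelta = timedelta(days=2)) -> List[str]:
    """List the ways the claim disagrees with the issuer's record."""
    if txn is None:
        return ["no matching card transaction"]
    issues = []
    if claim.merchant.strip().lower() != txn.merchant.strip().lower():
        issues.append("merchant mismatch")
    if abs(claim.amount - txn.amount) > 0.01:
        issues.append("amount mismatch")
    if abs(claim.receipt_time - txn.posted_time) > max_gap:
        issues.append("timestamp gap too large")
    return issues

claim = Claim("Cafe Example", 48.50, datetime(2025, 10, 1, 12, 30))
matching = CardTransaction("cafe example", 48.50, datetime(2025, 10, 1, 12, 31))
padded = CardTransaction("cafe example", 18.50, datetime(2025, 10, 1, 12, 31))

clean_result = inconsistencies(claim, matching)
padded_result = inconsistencies(claim, padded)
missing_result = inconsistencies(claim, None)
```

A real system would add more sources (merchant APIs, booking IDs, location data) and score the claim rather than give a pass or fail, but the principle is the same: a perfect-looking image counts for nothing if the surrounding records disagree.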

Nvidia Everywhere: 100k-GPU Labs, Robotaxis, and 6G Deals

Nvidia used its Washington, DC keynote on 28 October to stake out the next phase of AI infrastructure. The company and the US Department of Energy announced seven new AI supercomputers for Argonne, anchored by Solstice with 100,000 Blackwell GPUs and Equinox with 10,000 in 2026, for a combined 2,200 exaflops of AI performance. In industry, Eli Lilly is building what it calls pharma’s largest AI “factory” on >1,000 Blackwell Ultra GPUs; Uber will use Nvidia’s DRIVE platform to scale a robotaxi network that partners say could reach 100,000 vehicles; and Nokia secured a US$1 billion Nvidia investment tied to an AI-RAN and 6G collaboration. Nvidia also unveiled NVQLink, a quantum–GPU interconnect aimed at hybrid scientific workloads, and highlighted new enterprise ties including Palantir for operational AI.

Why it matters:

This is Nvidia turning the GPU into a national asset and a vertical stack for enterprise. The DOE fleet gives US science sovereign-scale compute for model training and agentic workloads. Anchor customers in pharma, telecoms and mobility justify the capital and pull the ecosystem toward tightly integrated hardware, networking and software where Nvidia writes the playbook. Quantum links are a hedge on the next wave of physics-heavy computing. For buyers, the centre of gravity keeps moving from piecemeal kits to full-stack “AI factories”, with clear speed and cost benefits but greater dependence on Nvidia’s roadmap. For rivals, the bar to compete rises again, from chips to interconnects to field-proven deployments.