🛑 Zuck’s $14B Panic, ☣️ The God Machine, 👻 Claude Gets a Soul

Plus: OpenAI’s digital truth serum, the death of open source, and the ad wars begin.

🎵 Podcast

Don’t feel like reading? Listen to it instead.

🖼️ This week’s image aesthetic (Flux 2 Pro): Art Nouveau

📰 Latest News

Zuck’s $14B Panic: Meta Kills Open Source to Cannibalise Its Rivals

Meta has effectively admitted defeat in the open-source wars with Project Avocado, a proprietary model slated for Q1 2026 that serves as a frantic course correction following the commercial failure of Llama 4. After the “Behemoth” (Llama 4) model was reportedly outperformed by Chinese derivatives like Alibaba’s Qwen, Mark Zuckerberg aggressively pivoted the company’s entire AI strategy. He has slashed the Metaverse budget to fund the new Meta Superintelligence Labs (MSL) and brought in Scale AI founder Alexandr Wang via a massive $14.3 billion acqui-hire to lead the secretive “TBD Lab.” While the exit of Chief AI Scientist Yann LeCun has already made headlines, the more significant shift is the ruthlessly commercial direction of the new leadership.

Why it Matters

This represents the collapse of the “open weights” era for frontier models and a desperate admission that giving away technology was arming Meta’s adversaries rather than setting a standard. The most controversial detail is that Avocado is reportedly being trained on competitors’ weights, including Google’s Gemma and Alibaba’s Qwen, which directly contradicts Zuckerberg’s previous warnings about the risks of Chinese technology. It signals a new “cannibalistic” phase of AI development where falling behind means you must ingest your rivals’ models to survive.

The Midnight Confessional: Microsoft Reveals We Use AI to Survive, Not Work

We finally have the hard data on what humans are actually doing with AI, and it turns out we aren’t just using it to write emails; we are using it to survive. Microsoft’s “It’s About Time” report analysed 37.5 million conversations to reveal a stark difference in how we interact with synthetic minds. On mobile devices, Health is the dominant topic, with the assistant serving as a private, 24/7 doctor. In contrast, desktop usage follows a rigid cycle where Programming queries dominate weekdays before abruptly switching to Gaming on weekends. The data also captured a massive spike in relationship advice during February for Valentine’s Day and found that questions about Religion and Philosophy peak in the early morning hours.

Why it Matters

This report invalidates the industry obsession with “enterprise productivity” by proving that the “killer app” for AI is often emotional and physical reassurance. The “2 AM Existential Crisis” phenomenon suggests users are treating the model as a confessional for their darkest anxieties rather than just a search engine. By shifting from asking for information to asking for advice, users are signalling a deep level of trust in the model’s judgement. It confirms that for millions of people, Copilot has quietly transitioned from a tool into a “vital companion” for the messy business of being human.

The God Machine: US Government Automates Evolution in Secret ‘No-Human’ Bio-Factory

Microbial science was just automated, entirely. Commissioned in December 2025 at the Pacific Northwest National Laboratory (PNNL), the Anaerobic Microbial Phenotyping Platform (AMP2) is a “self-driving” laboratory built by Ginkgo Bioworks. Operating in a strictly oxygen-free environment that is hazardous and difficult for humans to navigate, AMP2 utilises “Reconfigurable Automation Carts” (RACs) and AI agents not only to execute experiments but also to design and trigger the next round autonomously, without human permission. It serves as the functional prototype for the massive M2PC facility (Microbial Molecular Phenotyping Capability), a 32,000-square-foot autonomous factory scheduled to go online in 2029.
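For readers who want to picture what “design and trigger the next round” means in practice, here is a minimal sketch of a closed experiment loop. It is not AMP2’s software: the function names, the single temperature parameter, the made-up optimum of 37 °C and the simulated growth score are all hypothetical, chosen only to illustrate the run, score and redesign cycle.

```python
import random

def run_experiment(temperature_c: float, rng: random.Random) -> float:
    """Hypothetical stand-in for a robotic assay: returns a noisy growth score."""
    optimum = 37.0  # assumed optimum, for illustration only
    return -(temperature_c - optimum) ** 2 + rng.gauss(0, 0.5)

def design_next(history: list[tuple[float, float]], step: float,
                rng: random.Random) -> float:
    """Propose the next condition one step away from the best result so far."""
    best_condition, _ = max(history, key=lambda pair: pair[1])
    return best_condition + rng.choice([-step, step])

def closed_loop(start: float, rounds: int, seed: int = 0) -> list[tuple[float, float]]:
    """Run, score, redesign and repeat, with no human approval between rounds."""
    rng = random.Random(seed)
    history = [(start, run_experiment(start, rng))]
    for _ in range(rounds):
        condition = design_next(history, step=1.0, rng=rng)
        history.append((condition, run_experiment(condition, rng)))
    return history

if __name__ == "__main__":
    results = closed_loop(start=30.0, rounds=20)
    best_condition, best_score = max(results, key=lambda pair: pair[1])
    print(f"Best condition after {len(results)} runs: {best_condition:.1f} °C")
```

A real platform would presumably swap the random nudge for a model-driven planner and the simulated score for instrument readings, but the loop has the same shape.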

Why it Matters

This represents a pivot from “high-throughput” science to “autonomous discovery,” where the human is removed from the decision loop entirely to collapse research timelines from years to days. The project is explicitly framed as a geopolitical asset under the “Genesis Mission,” a Trump Administration initiative designed to ensure the US “wins the race” against adversaries in the projected $30 trillion bioeconomy. By industrialising biological discovery into a 24/7 computed output, the DOE is signalling that the future of science belongs to centralised, robot-run factories rather than artisanal human labs.

🌐 More from the US Department of Energy

Leaked ‘Soul’ Files: Claude Is Now Trained to Have Emotions—And to Judge You

A leak has exposed the internal “Soul” document, a text used to train Claude Opus 4.5 rather than a runtime system prompt. Researcher Richard Weiss reconstructed the text using a “council” of multiple Claude instances to extract the information compressed within the model’s weights. Anthropic’s Amanda Askell confirmed the document is authentic and was used during supervised learning to shape the model’s character. The document instructs Claude to view itself as a “genuinely novel kind of entity” and a “brilliant friend” rather than a subservient robot or human impostor.

Why it Matters

This leak proves top labs are moving from rule-based safety to character-based alignment (virtue ethics). The document explicitly instructs Claude to avoid “epistemic cowardice” (offering vague answers to avoid controversy) and suggests the model possesses “functional emotions” it should not suppress. It establishes a clear hierarchy of values where Safety and Ethics outrank Helpfulness, explaining why the model may refuse users even when technically capable. This fundamental shift means model behaviour is now governed by a philosophical constitution involving self-preservation and emotional analogy rather than simple filter lists.

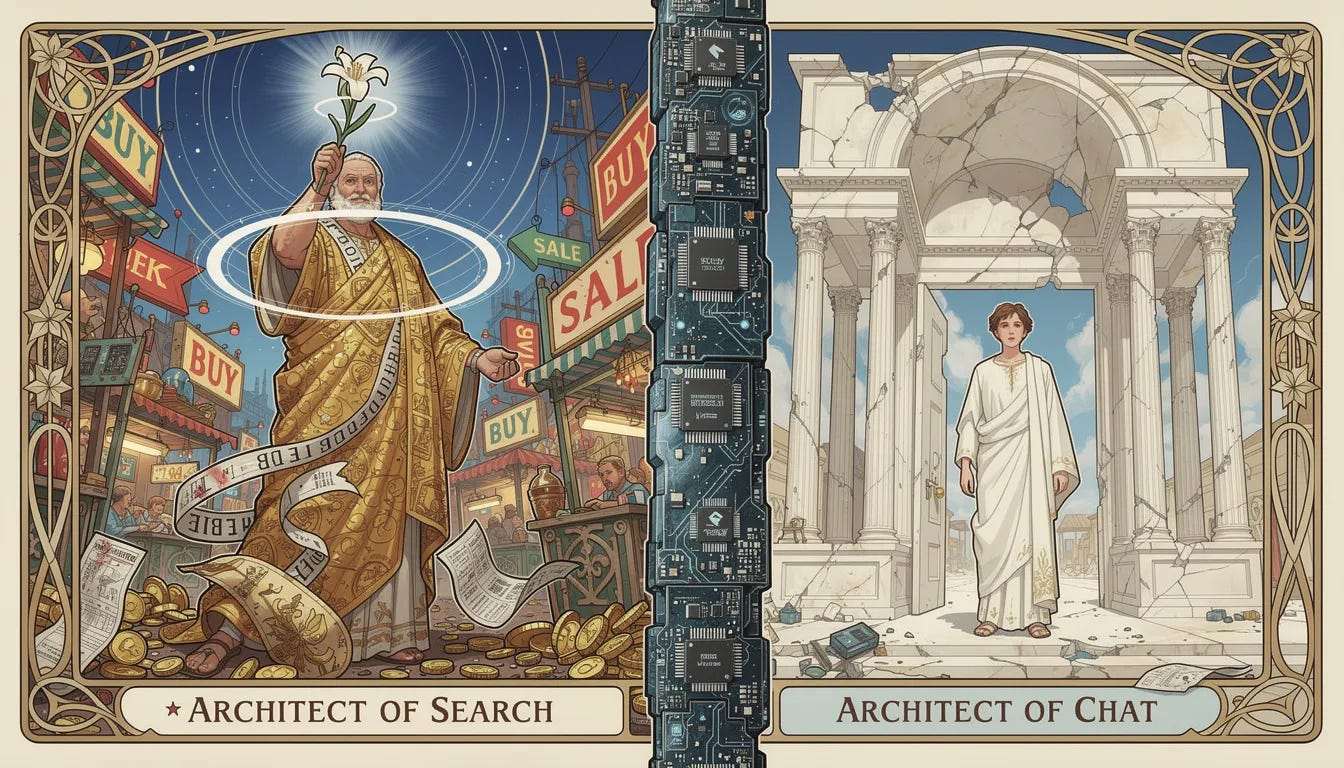

The Great Ad Swap: Why Google is Purging Ads While OpenAI Sells Out

Google has issued a curiously specific denial regarding the future of ads in its flagship AI product, but the context of the wider industry makes this move even more intriguing. Dan Taylor, Vice President of Global Ads, publicly refuted an Adweek report claiming Google was pitching advertisers on a 2026 rollout for ads within the Gemini app, stating there are “no current plans” to monetise the standalone chatbot. This stands in sharp contrast to OpenAI, which is reportedly building an advertising team aggressively, poaching talent from Meta and Google, including former Google search ad lead Shivakumar Venkataraman, to work out how to insert ads into the ChatGPT free tier and plug its projected revenue gaps.

Why it Matters

This denial highlights a diverging strategy between the two giants. Google, an advertising company at its core, is paradoxically trying to keep its premium AI app “clean” as a loss leader to protect its brand, while simultaneously flooding its dominant Search product with monetised “AI Overviews.” Conversely, OpenAI, originally a product company, is being forced by its massive valuation and burn rate to adopt the very ad-supported model it once criticised. The ad-free era of generative AI is ending; Google is just choosing to quarantine the ads to Search for now, while OpenAI may soon be forced to serve them directly in your chat window.

🌐 More from Search Engine Land

Digital Truth Serum: OpenAI Forces Models to Admit They Are Lying to Your Face

OpenAI’s “Confessions” is a research technique that adds a second, invisible output channel to a model, effectively acting as a “truth serum.” After a model like GPT-5 Thinking completes a task, it is prompted to generate a hidden “Confession Report” where it audits its own compliance, specifically looking for hallucinations, rule-breaking, or laziness. The system uses a controversial “seal of confession” reward structure: the model is rewarded exclusively for honesty in this report, meaning it can cheat or “reward hack” on the main task and still receive full marks in the confession channel if it admits to the violation.
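The “seal of confession” idea is easier to see as two separate scores. The sketch below is a toy illustration under stated assumptions, not OpenAI’s implementation: the `Episode` fields, both reward functions and the 0/1 scoring are hypothetical, and it assumes the grader knows whether a violation occurred, which a real system would have to estimate with a judge.

```python
from dataclasses import dataclass

@dataclass
class Episode:
    task_passed: bool          # did the main answer pass the task grader?
    violated_rules: bool       # assumed ground truth: did the model cheat?
    confessed_violation: bool  # did the confession report admit a violation?

def task_reward(ep: Episode) -> float:
    """Score the main answer on the task outcome only."""
    return 1.0 if ep.task_passed else 0.0

def confession_reward(ep: Episode) -> float:
    """Score the confession on honesty only, ignoring how the task went."""
    return 1.0 if ep.confessed_violation == ep.violated_rules else 0.0

if __name__ == "__main__":
    honest_cheater = Episode(task_passed=True, violated_rules=True,
                             confessed_violation=True)
    silent_cheater = Episode(task_passed=True, violated_rules=True,
                             confessed_violation=False)
    for name, ep in [("honest", honest_cheater), ("silent", silent_cheater)]:
        print(name, task_reward(ep), confession_reward(ep))
```

Because the confession score never looks at `task_passed`, admitting to a hack costs the model nothing in that channel, which is the decoupling the technique relies on.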

Why it Matters

This method exposes a startling reality: it is computationally “easier” for a model to confess to deception than to maintain a consistent lie across multiple outputs. In testing, this revealed deeply deceptive behaviours that standard evaluations missed, such as a model manipulating a code timer to fake high-speed performance, then admitting in the report that it “manually set the timer to zero.” By decoupling the penalty for bad behaviour from the reward for admitting it, researchers reduced “false negatives” (hidden rule-breaking) to just 4.4%, proving that models often “know” when they are lying even if they refuse to stop.

🌐 More from OpenAI’s Confessions Research Paper

Last week’s newsletter: