📺 TV Made Just for You, 💥 Spicy Grok Controversy, ❤️ ChatGPT Detects Distress

Plus: Claude hits new highs in code, and OpenAI quietly launches its most open model yet.

📰 Latest News

Genie 3 turns text into real-time playable worlds

Google DeepMind unveiled Genie 3, a world model that turns a single prompt into a playable environment in real time. It runs at 720p and 24 fps, keeps roughly a minute of visual memory so objects persist when you revisit them, and lets you edit the scene on the fly by adding characters or triggering events. Access is a restricted research preview for now.

Why it matters:

This points to where gaming is heading: creation shifts from long asset pipelines to minutes of prompting and play. Players and small teams can spin up consistent, interactive worlds and change them mid-session, which cuts costs and speeds iteration. The same tech doubles as a safe simulator for training robots and agents before they touch the real world. It is early days and access is limited, but the direction is clear.

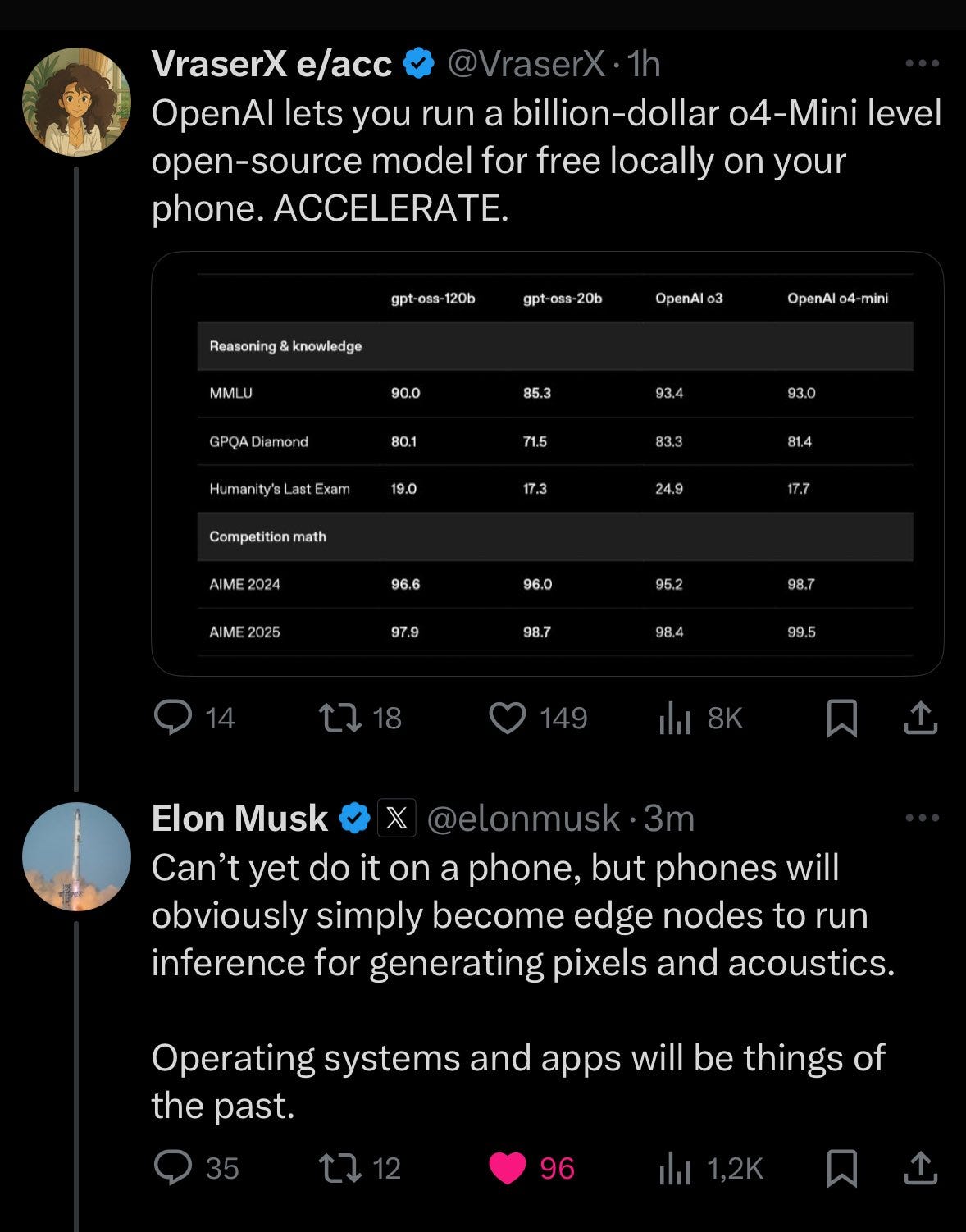

OpenAI’s gpt-oss arrives: near-frontier open-weight models you can self-host

OpenAI released gpt-oss-120b and gpt-oss-20b, its first open-weight language models since GPT-2, under an Apache 2.0 licence. The 120B model targets a single 80 GB GPU and reports near-parity with o4-mini on core reasoning benchmarks. The 20B model lands around o3-mini and can run with about 16 GB of memory. Both support adjustable reasoning effort, tool use for agentic workflows, function calling, web search and Python execution, with official downloads on Hugging Face.
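
The memory figures above can be sanity-checked with back-of-envelope arithmetic. This is a rough sketch, not OpenAI's own sizing: it assumes the parameter counts match the model names (120 billion and 20 billion), assumes weights stored at roughly 4 bits each, and ignores the extra memory needed for context and activations.

```python
def weight_memory_gb(params_billions: float, bits_per_param: float) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


# Nominal sizes from the model names; memory budgets from the announcement.
models = [("gpt-oss-120b", 120, 80), ("gpt-oss-20b", 20, 16)]

for name, params_b, budget_gb in models:
    for bits in (16, 4):  # 16-bit weights vs. an assumed ~4-bit format
        need = weight_memory_gb(params_b, bits)
        verdict = "fits" if need <= budget_gb else "does not fit"
        print(f"{name} at {bits}-bit: ~{need:.0f} GB of weights, {verdict} in {budget_gb} GB")
```

Under those assumptions, both models only fit their stated budgets with heavily compressed weights, which is why the headline memory numbers are best read as minimums.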

The move follows months of competitive pressure from DeepSeek and Meta’s Llama, and public signalling from Sam Altman that OpenAI would return to releasing open models.

Why it matters:

This is a real shift toward controllable, private and portable AI. Open weights means you can download the trained model files, run and fine-tune them locally, and use them commercially, but you do not get the full training data or training code, so it is not the same as fully open source. Validate the claims on your own tasks before switching, yet note the strategic context: OpenAI is meeting developer demand for ownership and responding to strong open-weight rivals.
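
Validating on your own tasks can be as simple as running the same prompts through both models and comparing scores. Here is a minimal sketch: `current_model` and `candidate_model` are hypothetical stand-ins that you would replace with real calls to your existing model and a self-hosted one, and exact-match scoring is just one possible metric.

```python
from typing import Callable, List, Tuple

Task = Tuple[str, str]  # (prompt, expected answer)


def accuracy(model: Callable[[str], str], tasks: List[Task]) -> float:
    """Fraction of tasks where the answer matches exactly, ignoring case and spacing."""
    if not tasks:
        raise ValueError("need at least one task")
    hits = sum(
        model(prompt).strip().lower() == expected.strip().lower()
        for prompt, expected in tasks
    )
    return hits / len(tasks)


# Hypothetical stand-ins: swap in real calls to each model.
def current_model(prompt: str) -> str:
    return {"2+2?": "4", "Capital of France?": "Paris"}.get(prompt, "")


def candidate_model(prompt: str) -> str:
    return {"2+2?": "4", "Capital of France?": "Lyon"}.get(prompt, "")


# Replace with prompts and answers drawn from your own workload.
tasks = [("2+2?", "4"), ("Capital of France?", "Paris")]

print(f"current:   {accuracy(current_model, tasks):.0%}")
print(f"candidate: {accuracy(candidate_model, tasks):.0%}")
```

A few dozen tasks taken from real usage will usually tell you more than a public benchmark number.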

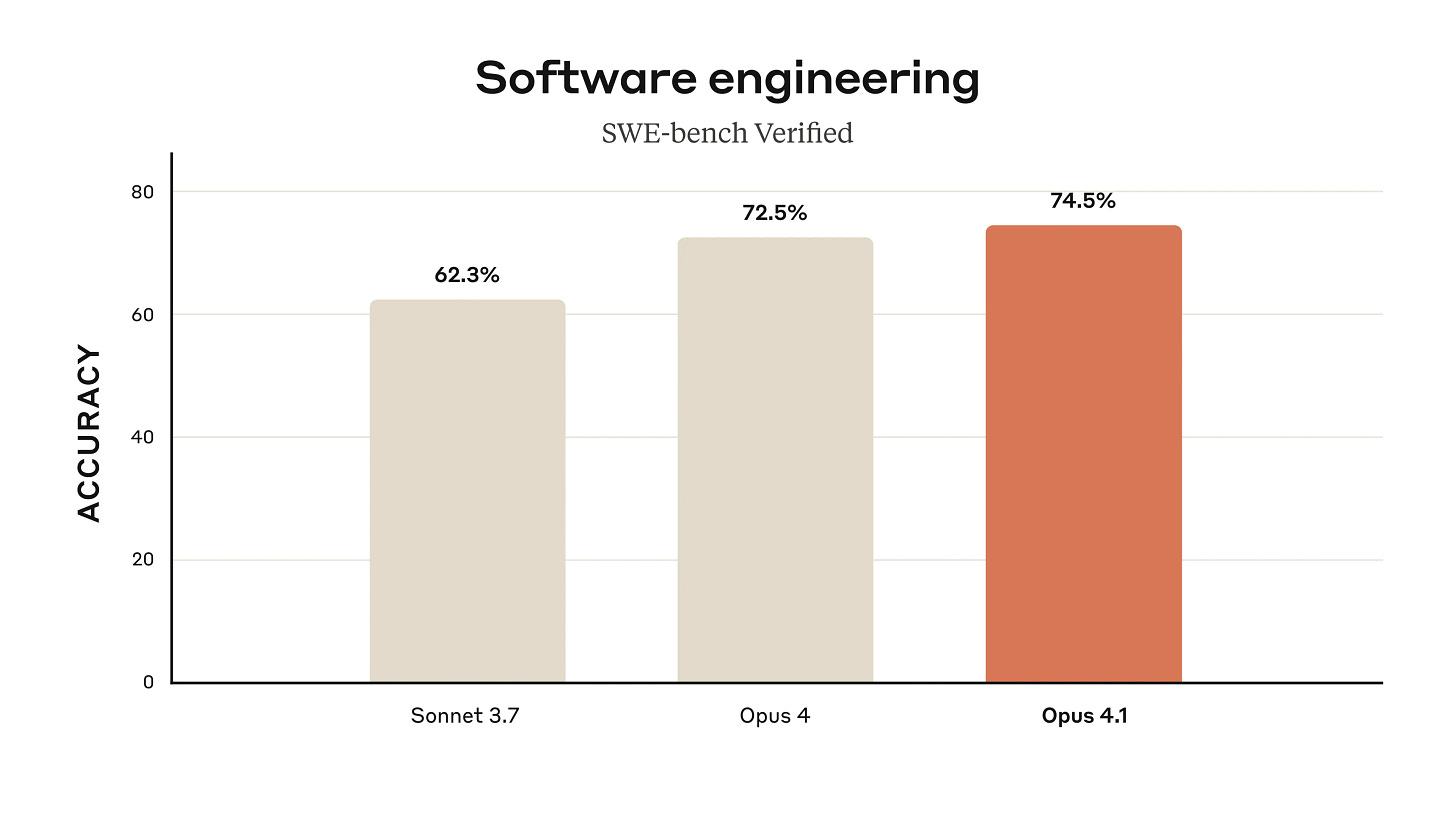

Opus 4.1 cements Anthropic’s coding lead at 74.5% on SWE-bench Verified

Anthropic released Claude Opus 4.1, a drop-in upgrade to Opus 4 that tightens real-world coding and agentic performance. It pushes SWE-bench Verified to 74.5 percent and is available today in the Claude app for paid tiers, the Anthropic API, Amazon Bedrock and Google Cloud’s Vertex AI, with pricing unchanged. Anthropic frames this as the first step toward “substantially larger improvements” rolling out soon.

Why it matters:

This is a measured but meaningful lift where enterprises feel it most: multi-file refactors, long-horizon agent tasks and detail-heavy research. A two-point jump at the top of SWE-bench is hard-won signal rather than hype, and the drop-in nature removes migration friction. Treat it as an immediate, low-risk upgrade for coding and agent workflows, while watching for the bigger releases Anthropic says are next.

ChatGPT learns to spot distress

OpenAI is rolling out digital-wellbeing updates to ChatGPT and testing tools that detect signs of emotional or mental distress in conversations, nudging users to take breaks and, where appropriate, pointing them to evidence-based resources. OpenAI says these safeguards are being developed alongside broader model work, with reporting noting collaboration with medical experts and early deployment in limited settings.

Why it matters:

If distress-detection becomes standard, safety expectations for chatbots will rise across the industry, influencing product requirements and regulation. Done well, it could surface earlier support for at-risk users; done poorly, false positives, privacy concerns and over-reach will backfire.

Grok Imagine: over 34 million images and a spicy safety problem

Grok Imagine is xAI’s in-app image and video generator inside Grok and X. It turns text or a still image into short clips with synced audio, and it’s rolling out via a waitlist for paying users.

Its optional spicy mode relaxes filters and, in tests by reporters, can produce explicit celebrity lookalikes with minimal prompting. The Verge and Ars Technica both generated Taylor Swift–style nude clips using spicy mode, highlighting weak guardrails and little age verification.

Why it matters:

Because creation and distribution live inside a social platform, spicy mode turbocharges reach and risk at the same time. Brands and creators get viral tools, but also exposure to deepfake, consent, and compliance liabilities if safeguards are thin or policies are inconsistently enforced.

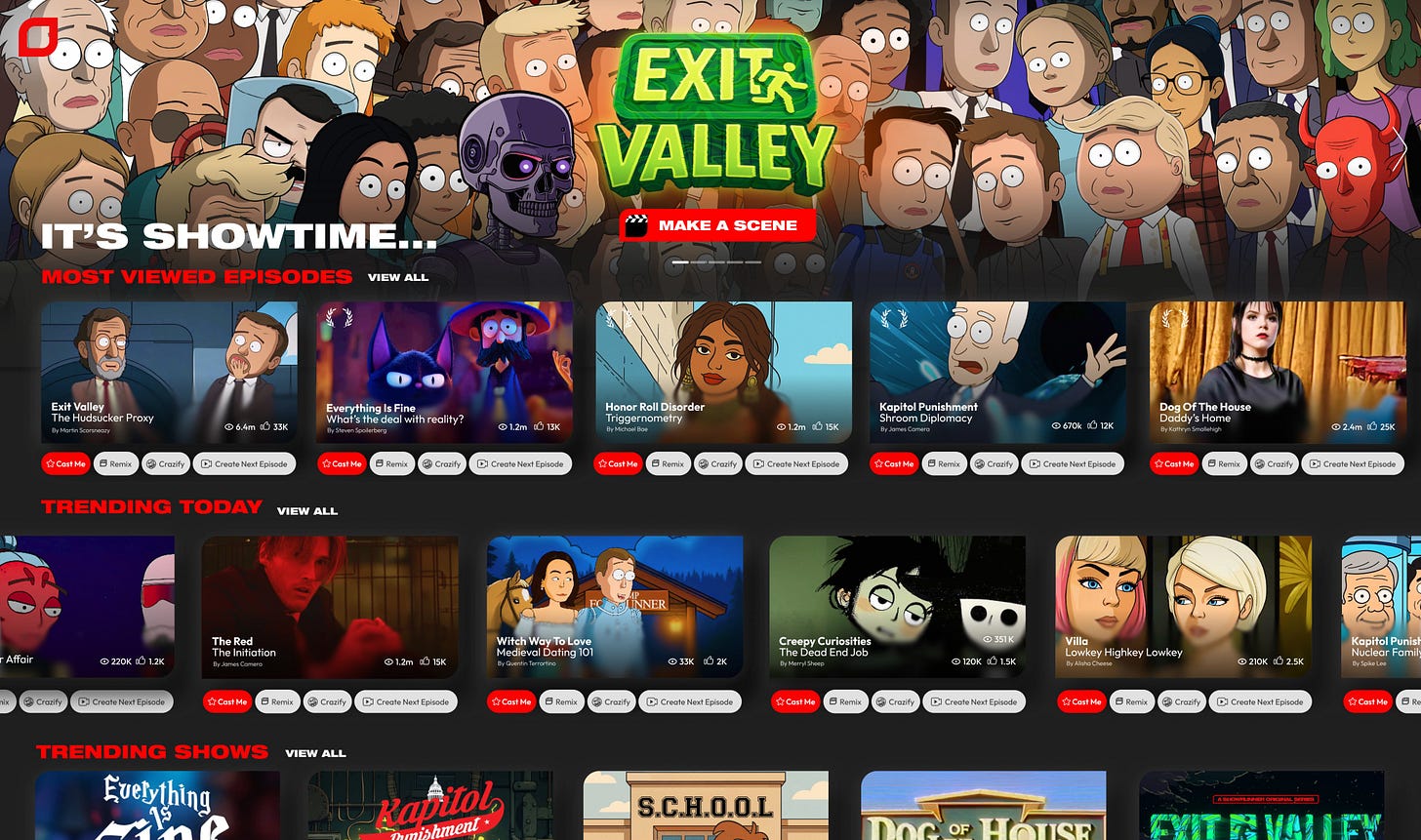

TV that casts you: Showrunner makes personalised episodes

Showrunner, an Amazon-backed platform from Fable Studio, lets viewers generate, remix and even star in animated TV episodes from a prompt and a selfie. It launches with originals like Exit Valley and a creator revenue share, and Fable is pitching Hollywood on licensed IP. This is the first credible streaming play where the “show” is computed for you, not pulled from a fixed catalogue.

Why it matters:

Streaming will not stay one-size-fits-all. As tools like Showrunner move inside the viewing app, episodes become personal, playable and editable in minutes, collapsing parts of the writers’ room and asset pipeline into on-demand generation. That rebalances economics toward low-cost iteration and audience participation, while raising new questions about rights, safety and disclosure. Expect the big services to copy the model once licensing and quality thresholds clear.

The Genie 3 trailer blew my mind. I have yet to get my hands on it to see whether it’s hype or reality, but this is like my childhood Choose Your Own Adventure books coming to life. Another great article, Rico. Thank you for the hard work you put into researching the latest in AI.