🎬 Sora 2 Takes On TikTok, 🧹 AI Workslop Exposed, 📚 Grokipedia Rewrites Truth

40% of workers have to fix bad AI output, 75M fake tracks pulled from Spotify, and OpenAI’s video feed stars… you.

🎵 Podcast

Don’t feel like reading? Listen to it instead.

By the way, if you like the podcast, please let me know (it takes 20 seconds).

📰 Latest News

Sora 2, OpenAI’s competitor to TikTok

OpenAI has launched Sora, a social video app powered by its upgraded Sora 2 video model, where every clip is AI-generated and can star you and your friends through a consent-based “cameo” system. The iOS app is invite-only in the United States and Canada and includes identity verification so people can control when their likeness is used. OpenAI is pitching this not as a TikTok clone but as a creativity-first network with strong guardrails, and has publicised principles that prioritise long-term user wellbeing and meaningful controls over the feed.

Why it Matters:

This shifts AI video from a content treadmill to a friend-centred creation loop, which is more likely to feel personal, safer and sticky. Consent, verification and co-ownership controls aim to curb impersonation while still letting users remix each other, and the “principles first” posture signals an attempt to avoid the usual social-app harms before they harden into habits. For creators and brands it lowers the cost of making short video and changes distribution logic, since what spreads may be participatory memes with people you know rather than pure virality. The experiment is whether a social network built on AI-made clips can stay fun, useful and healthy once the novelty wears off.

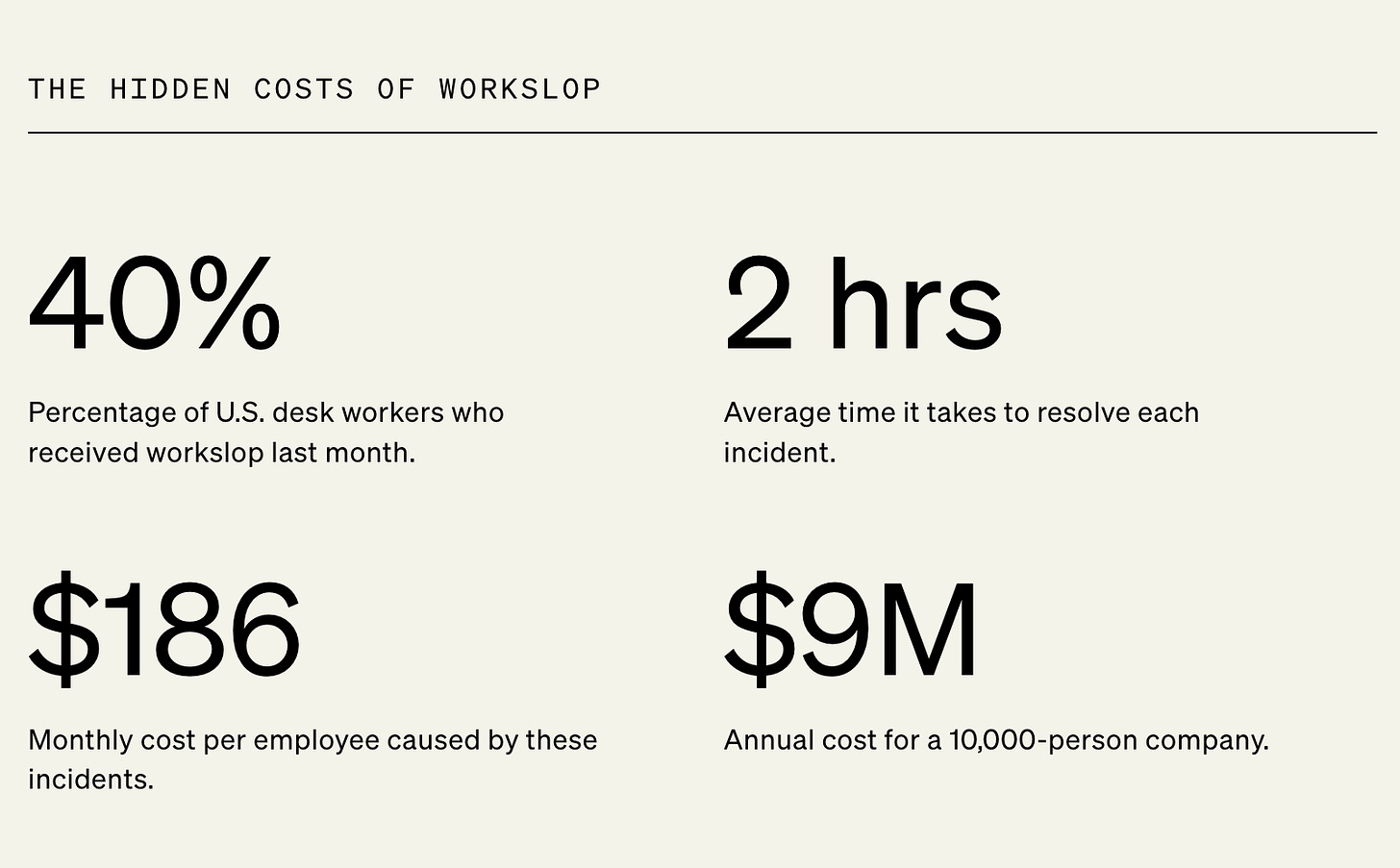

Workslop: The Hidden AI Plague Costing Millions

A Stanford Social Media Lab and BetterUp Labs study says AI “workslop” is quietly draining teams of time and money. In a September 2025 survey of 1,150 U.S. desk workers, 40 percent reported receiving polished-looking but low-substance AI output that took about two hours to fix per incident, translating to roughly 186 dollars per employee per month and about 9 million dollars a year for a 10,000-person company. The report frames workslop as a false signal of productivity that erodes trust and clogs workflows.
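
For anyone who wants to sanity-check the headline number, here is a rough back-of-envelope sketch. It assumes the 186 dollars a month applies only to the 40 percent of staff who receive workslop, which is my reading of the figures above rather than something the summary states outright. The function and variable names are made up for illustration.

```python
# Back-of-envelope check of the workslop cost figures quoted above.
# Assumption (not stated in the summary): the monthly cost applies only
# to the share of employees who actually receive workslop.

def annual_workslop_cost(headcount: int, percent_affected: int, monthly_cost: int) -> int:
    """Return the estimated yearly cost in dollars for a company."""
    affected = headcount * percent_affected // 100  # employees hit by workslop
    return affected * monthly_cost * 12             # twelve months a year


annual = annual_workslop_cost(headcount=10_000, percent_affected=40, monthly_cost=186)
print(f"${annual:,}")  # prints $8,928,000
```

Under that assumption the total lands just under 9 million dollars a year, in line with the quoted figure. Applying 186 dollars to every employee instead would give roughly 22 million, so the prevalence assumption matters.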

Why it Matters:

This is not a story about shiny tools but about operational drag. If organisations adopt AI without standards for quality, disclosure and review, they shift hidden work onto colleagues and dilute real performance gains. The fix is managerial, not magical: set clear use policies, train for prompt discipline and context, require human sign-off where stakes are high, and measure rework so leaders see the true cost. Done well, AI still boosts throughput and frees teams for higher-value tasks; done poorly, it becomes expensive busywork at scale.

Grokipedia Wants to Rewrite Facts with AI

Elon Musk is building Grokipedia, an AI-written encyclopaedia to rival Wikipedia, powered by xAI’s Grok models. Public reporting says the aim is to correct perceived bias and errors by having Grok review and rewrite source material, but no launch date or product details have been confirmed beyond early announcements. In short, it is a stated intention to replace volunteer moderation with AI-driven curation, not a released service.

Why it Matters:

If knowledge is compiled and rewritten by a model rather than by transparent, human-logged edits, the centre of gravity shifts from provenance to plausibility. Users may get faster updates and broader coverage, yet verification becomes harder, editorial standards become a product decision rather than a community norm, and the risk of one company’s worldview imprinting itself on reference material grows. Teams that rely on neutral sources will need stronger citation policies and audit trails, and platforms will face pressure to label AI-generated reference text so readers know what they are consuming.

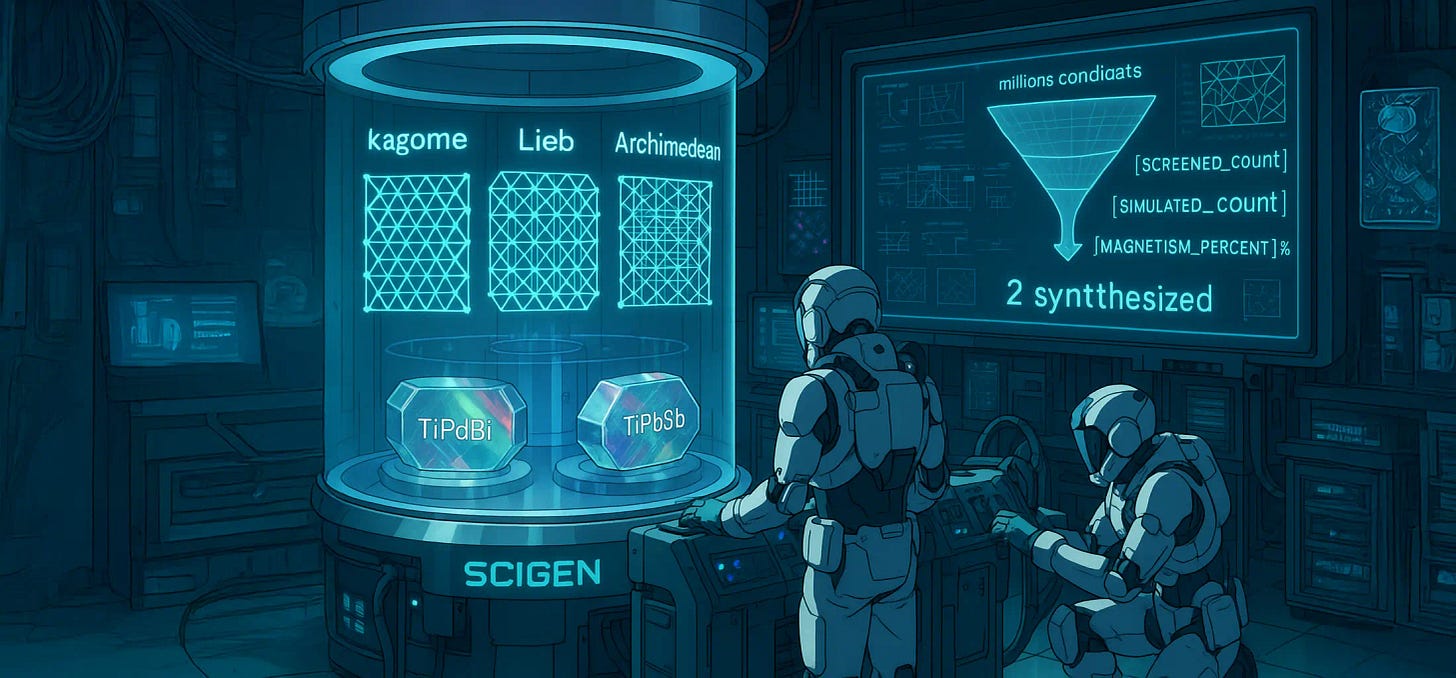

MIT AI Designs and Makes New Quantum Materials

MIT has demonstrated an AI method that designs quantum materials and delivers laboratory validation. SCIGEN forces popular generative models to follow specific geometric rules such as kagome, Lieb and Archimedean lattices that are known to produce exotic quantum behaviours. The team generated millions of candidates, screened about a million for stability, ran detailed simulations on 26,000, found signs of magnetism in 41 percent, and then synthesised two previously unknown compounds, TiPdBi and TiPbSb. The work was published on 22 September 2025 in Nature Materials and profiled by MIT News the same day.
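
To put the funnel in absolute terms, here is a quick sketch using only the figures quoted above. The variable names are illustrative, and the result is a simple percentage calculation, not a number reported by the researchers.

```python
# Rough size of the "signs of magnetism" group, from the figures above.
simulated = 26_000          # candidates that received detailed simulation
magnetic_percent = 41       # share of those showing signs of magnetism

magnetic = simulated * magnetic_percent // 100
print(f"{magnetic:,} candidates")  # prints 10,660 candidates
```

So the two synthesised compounds were picked from a pool of roughly ten thousand magnetic candidates.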

Why it Matters:

Materials discovery has been a decade-long slog of trial and error for phenomena like quantum spin liquids and high-temperature superconductivity, with barely a dozen promising candidates identified by hand. SCIGEN flips the workflow by letting researchers aim models at the right structural motifs from the outset, compressing months of guesswork into a programmable search and proving end-to-end feasibility by making two real materials. If this approach scales, chipmakers, battery designers and quantum hardware teams could move faster from idea to prototype, while regulators and funders will need to prioritise validation and synthesis capacity since the bottleneck shifts from imagination to fabrication.

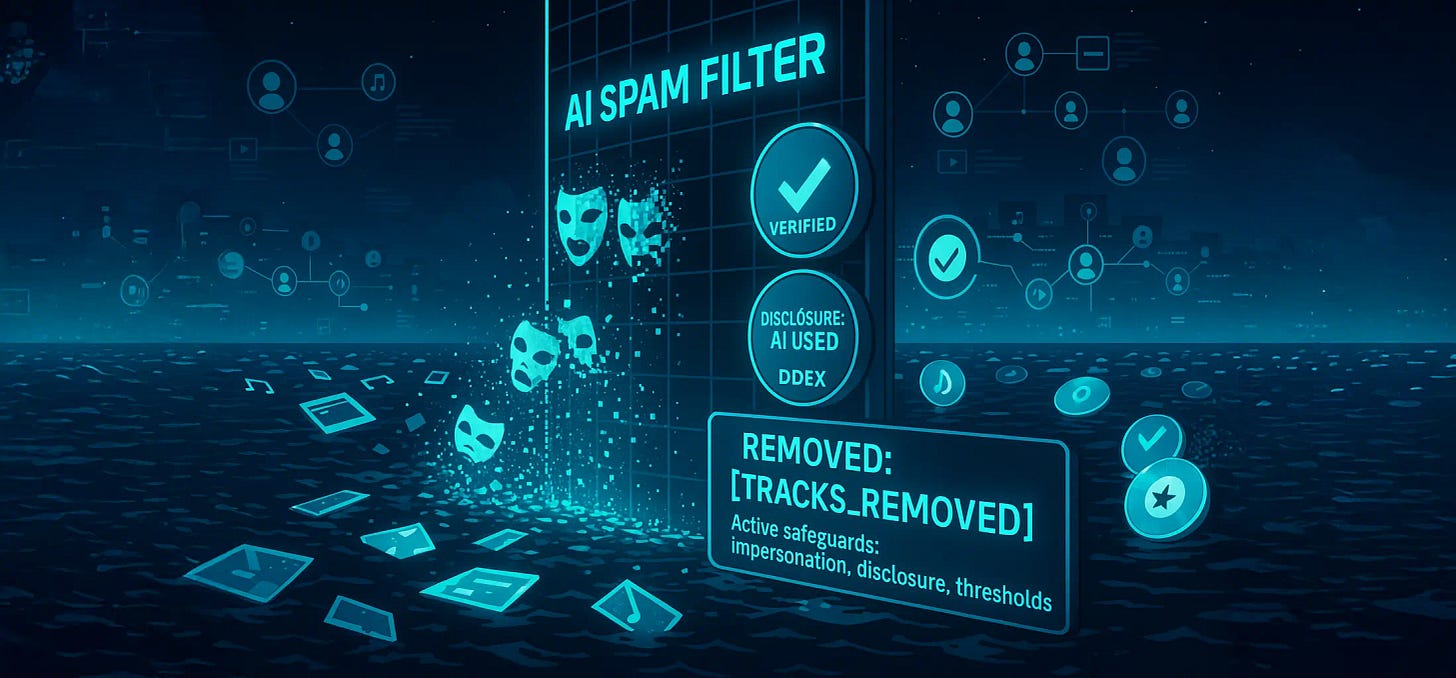

Spotify Just Axed 75 Million AI Spam Tracks. The Clean-up Has Begun

Spotify says it has removed over 75 million AI-generated “spammy” songs in the past 12 months and is rolling out global safeguards, including an AI-powered spam filter, stricter rules against voice-clone impersonation, and work with the DDEX standards body to disclose when AI is used in music. The company frames this as strengthening trust rather than punishing responsible creators, and outlets note the purge comes as generative tools make mass uploads trivial and the tally nears Spotify’s roughly 100 million licensed-track catalogue.

Why it Matters:

This strikes at the economics of playlist-farming and catalogue clutter that siphon royalties from human artists, so listeners should see cleaner recommendations and fewer junk tracks while rights holders face less dilution. The mix of disclosure standards, impersonation enforcement and existing minimum-stream thresholds tightens auditability and could become the template other platforms adopt, accelerating labelling norms for AI while preserving room for legitimate creative use. The real test is precision: filters must separate fraud from experimentation, or risk down-ranking innovative work under the guise of safety.

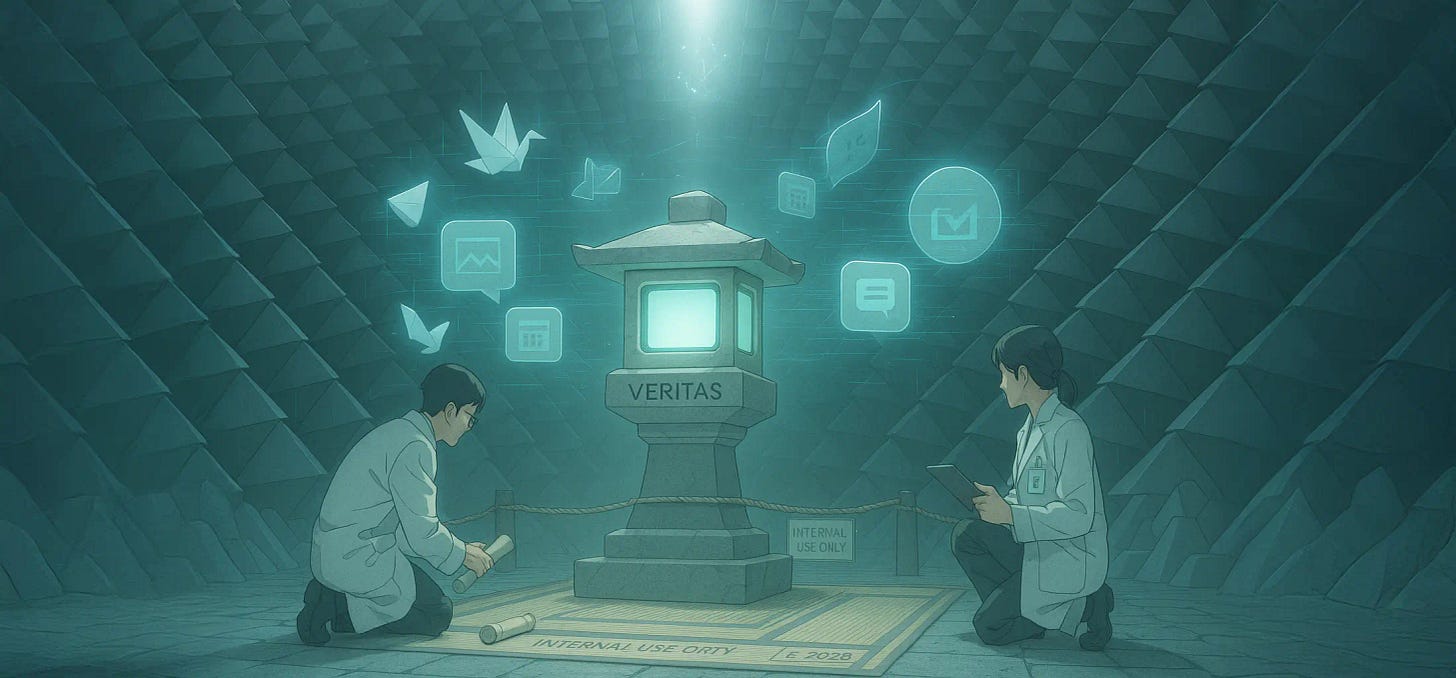

Apple Rebuilds Siri in Private

Apple has built an employee-only chatbot called Veritas to road-test a revamped Siri, and there are no plans to release it publicly while the major Siri upgrade slips toward 2026. Veritas lets staff trial longer, more contextual conversations and in-app actions such as searching personal data and editing photos, but for now it remains an internal harness rather than a product. Reporting also points to Apple exploring Gemini-powered search options as it reassesses its AI assistant roadmap.

Why it Matters:

iPhone and Mac users should not expect a leap in Siri’s intelligence in the near term, which slows Apple’s ability to match rivals on everyday assistant tasks and workflow automation. Veritas signals Apple is rebuilding the assistant from the inside out, prioritising privacy and controlled testing before broad release, yet the delay raises competitive and legal pressure as expectations rise. If Apple blends its own models with external options like Gemini under strict privacy controls, users could eventually see better answers and actions across apps, but the timing remains uncertain.