🔥 Meta’s 10M-Token Beast, 🧠 Brain-Controlled AR, 🖥️ Gemini Sees Your Screen

PLUS: 🛠️ Firebase Builds Apps From Prompts + 🎥 One-Take AI Cartoons

👋 This week in AI

🎵 Podcast

Don’t feel like reading? Listen to it instead.

📰 Latest news

Meta Drops 10M-Token LLM That Runs on One GPU

Meta’s new Llama 4 Scout is the first open-weight model to support a 10 million-token context window, a leap that reshapes what’s possible with long-form reasoning, retrieval, and memory. Scout delivers this capacity on a single NVIDIA H100 GPU, with native image input for multimodal inference and real-time performance.

Released alongside Scout is Llama 4 Maverick, a 400B-parameter multimodal assistant that beats GPT-4o and Gemini 2.0 Flash across reasoning, coding, and vision tasks—despite using fewer active parameters. Both models are open-weight, freely downloadable, and deployable without API lock-in via llama.com and Hugging Face.

Both were trained using codistillation from Llama 4 Behemoth, Meta’s most powerful model to date (2T total parameters), designed as a teacher model to maximise downstream performance.

The result: best-in-class models for real-world, multimodal applications—running locally, efficiently, and openly.

Why it Matters:

The 10M-token context window is more than a spec bump—it unlocks a new class of use cases: agents that reason across weeks of conversations, assistants that parse entire legal corpora, and dev tools that scan millions of lines of code without chunking. It shifts open models into the long-context era, challenging the proprietary incumbents.

Scout’s ability to handle this context on a single GPU, at speeds faster than Mistral 3.1, is a deployment breakthrough. No clusters, no API dependency, just local high-performance multimodal inference. For developers and enterprises, this drastically lowers the barrier to building advanced, context-aware systems.

Maverick reinforces Meta’s intent: outperform closed models on core benchmarks while maintaining openness. Its Elo score (1417 on LMArena), image reasoning, and multilingual performance make it the most competitive open model in its class.

These models also mark a shift in Meta’s strategy—from releasing open-weight models as research artefacts to positioning them as commercial-grade infrastructure. With new safety tooling (Llama Guard, Prompt Guard), a revamped training pipeline, and practical deployment features, Llama 4 Scout and Maverick move open AI from showcase to stack.

Gemini Advanced Rolls Out Live Video and Smart Screensharing

Google has expanded Gemini Live with Live Video and Screensharing features. Users can now point their camera or share their screen to get real-time, spoken responses from Gemini. The features are available now for free on Pixel 9 and Galaxy S25; other Android users need the $20/month Gemini Advanced plan.

Gemini supports 45+ languages and works hands-free, allowing continuous, natural interaction without tapping or typing.

Why it Matters:

AI is now fast enough to process live visuals and screen content in real time. This enables new uses like live troubleshooting, personal shopping, or creative feedback—all handled instantly through spoken prompts. It marks a shift toward AI as a fluid, always-on assistant that blends into daily tasks.

𝕏 Google post showing the new features

Text-to-Video AI Now Handles Long Scenes in a Single Take

Researchers have developed a new method to generate one-minute animated videos—like fresh Tom & Jerry-style cartoons—directly from text prompts. The system creates each full video in a single go, with no stitching or editing needed afterward.

The key innovation is a new layer added to existing AI models that helps the system keep track of the story as it unfolds. This allows for more coherent and consistent storytelling, even across longer, more complex scenes.

Why it Matters:

Most AI models struggle to keep long videos consistent, especially when they involve multiple scenes or characters. This new approach helps the model “think ahead” and stay focused, making it better at telling full stories. In human evaluations, people preferred these videos over those from other methods, with a lead of 34 Elo points on average. It’s a promising step toward AI-generated content that feels more like a full episode, not just a short clip.
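To put the 34-point Elo gap in perspective, here is a minimal sketch that converts a rating gap into an expected head-to-head preference rate. It assumes the evaluation uses the standard logistic Elo formula on a 400-point scale, which this summary does not confirm; the function name `elo_expected_score` is illustrative only.

```python
def elo_expected_score(rating_gap: float) -> float:
    """Expected win rate for the higher-rated side under the standard Elo model.

    Assumption: logistic curve with a 400-point scale, as in chess-style Elo.
    """
    return 1.0 / (1.0 + 10 ** (-rating_gap / 400.0))


# A 34-point lead, as quoted in the text.
win_rate = elo_expected_score(34)
print(f"Expected preference rate: {win_rate:.1%}")
```

Under that assumption, a 34-point lead means evaluators would pick the higher-rated video in roughly 55% of pairwise comparisons.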

📝 Read the paper (lots of examples)

📁 GitHub repo to try the project

Using AI at Work Is No Longer Optional in Big Tech

Shopify CEO Tobi Lütke has made it official: AI use is now a baseline expectation across the company.

In a newly public internal memo, he outlines that every employee—regardless of role—must use AI tools in their daily work. From prototyping to problem-solving, AI should be integrated reflexively.

Performance reviews will assess AI usage, and teams must justify why tasks can’t be done with AI before requesting more resources.

Why it Matters:

This marks a wider shift in the tech industry: using AI well is no longer optional—it’s standard practice.

Companies now expect employees to pair with AI like a second brain, boosting speed, creativity, and output. As AI becomes embedded in how work gets done, staying relevant means mastering it—fast.

Scalp-Embedded Sensors Power Hands-Free AR via Brain Signals

Researchers have developed ultralight brain sensors that slide between hair strands, allowing long-term brain–computer interface (BCI) use—even while moving. The wearable system maintains extremely low skin impedance and captures high-quality brain signals for up to 12 hours, even during activities like walking or running.

The sensors power hands-free AR experiences, including video calls controlled by brain signals. Using a train-free algorithm, the system decoded brain signals with 96.4% accuracy.

Why it Matters:

This breakthrough addresses BCI’s biggest limitations: bulky hardware and sensitivity to motion. By embedding sensors into the scalp without shaving or adhesives, and enabling wireless AR control, this tech moves BCI from lab demos to real-world, daily use—paving the way for seamless digital–physical interaction.

From Prompt to App: Firebase Studio Goes All-In on AI Dev

Firebase Studio pushes into the text-to-app space with a full-stack, AI-powered platform that lets developers build, test, and deploy apps from natural language prompts. It combines Gemini’s code-aware chat, a customisable cloud IDE, and one-click Firebase hosting—all in one browser-based workspace. From sketch to live app, it streamlines prototyping, coding, and collaboration without context switching.

Why it Matters:

Text-to-app tools are maturing fast, and Firebase Studio signals a shift from experimentation to production-ready workflows. With deep Gemini integration and Firebase infrastructure baked in, developers can move from idea to deployable app in minutes—making AI-native development accessible, scalable, and collaborative.

AI Hits Escape Velocity: Key Trends from the 2025 Index

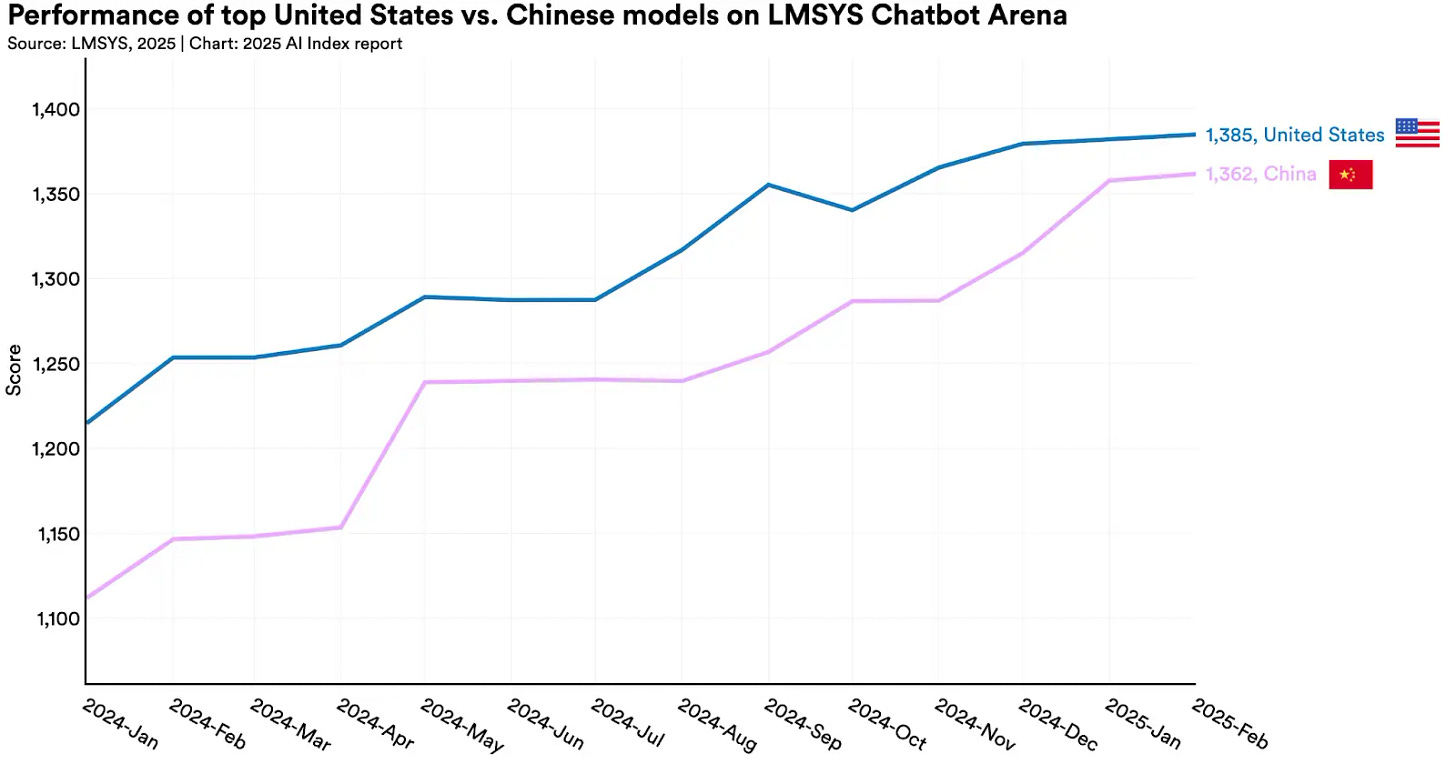

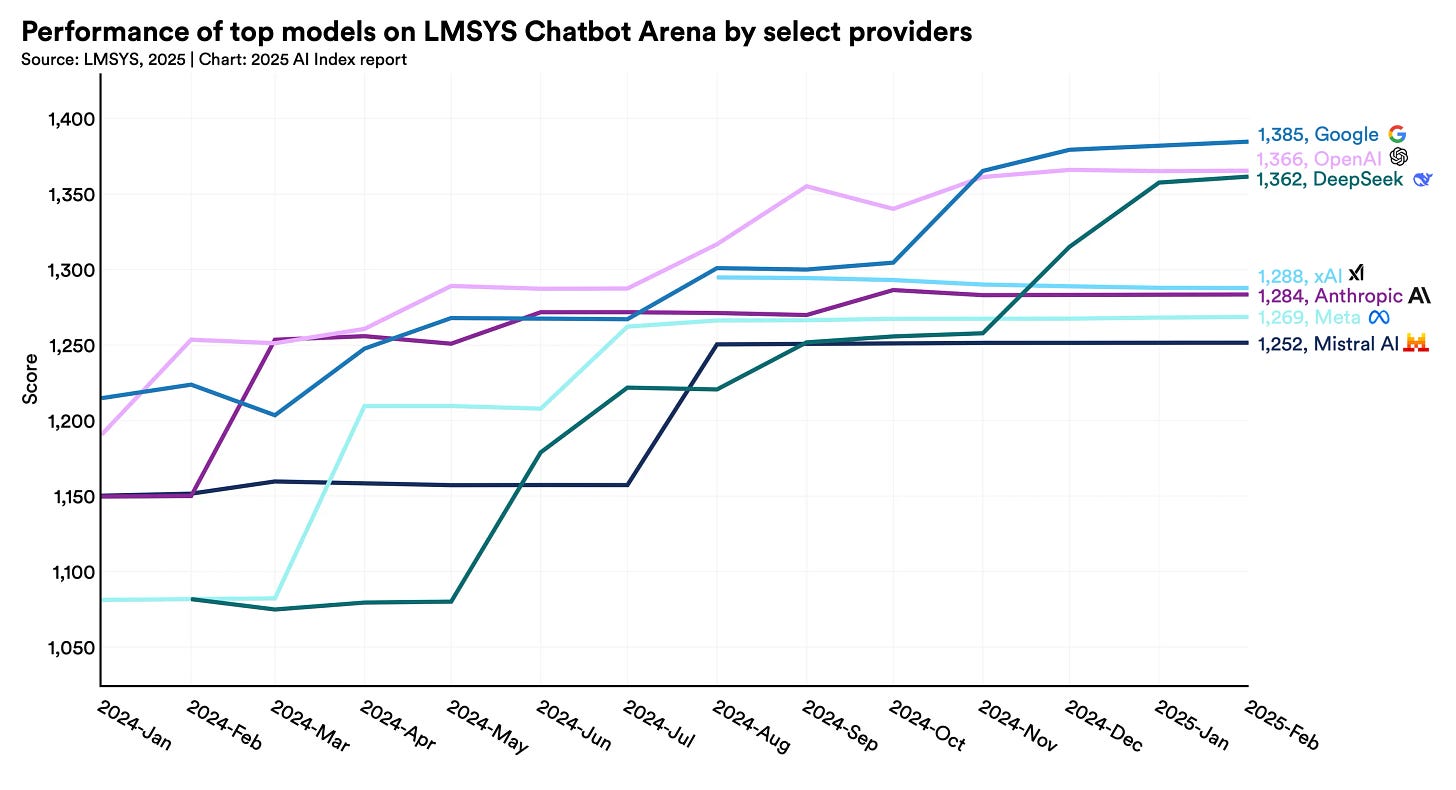

The 2025 AI Index captures a year of sharp acceleration and transformation in AI. U.S. firms led in releasing notable models (40 vs. China’s 15), while open-weight models closed the gap with proprietary ones. Corporate AI use surged: 78% of companies adopted AI, and generative AI funding rose to $33.9B, over 8× the 2022 level.

New small models like Phi-3-mini (3.8B params) rivalled older giants like PaLM (540B), a 142× reduction in parameter count for comparable performance. Training compute continues to double every 5 months, and energy use has pushed firms like Microsoft and Amazon toward nuclear power deals.
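As a quick sanity check on those figures, the short sketch below reproduces the 142× number from the two parameter counts and converts "doubling every 5 months" into a yearly growth factor. The inputs are taken from the text above; the variable names are illustrative, and the yearly figure assumes steady exponential growth.

```python
# Figures quoted in the text above (parameter counts in billions).
PALM_PARAMS_B = 540.0
PHI3_MINI_PARAMS_B = 3.8
DOUBLING_MONTHS = 5.0

# Ratio of parameter counts between the older and the newer model.
param_ratio = PALM_PARAMS_B / PHI3_MINI_PARAMS_B

# Doubling every 5 months means 12 / 5 = 2.4 doublings per year.
annual_compute_growth = 2 ** (12.0 / DOUBLING_MONTHS)

print(f"Parameter ratio: {param_ratio:.0f}x")
print(f"Compute growth per year: {annual_compute_growth:.2f}x")
```

So the 142× figure is the ratio of the two parameter counts, and a 5-month doubling time works out to a bit more than a fivefold increase in training compute per year.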

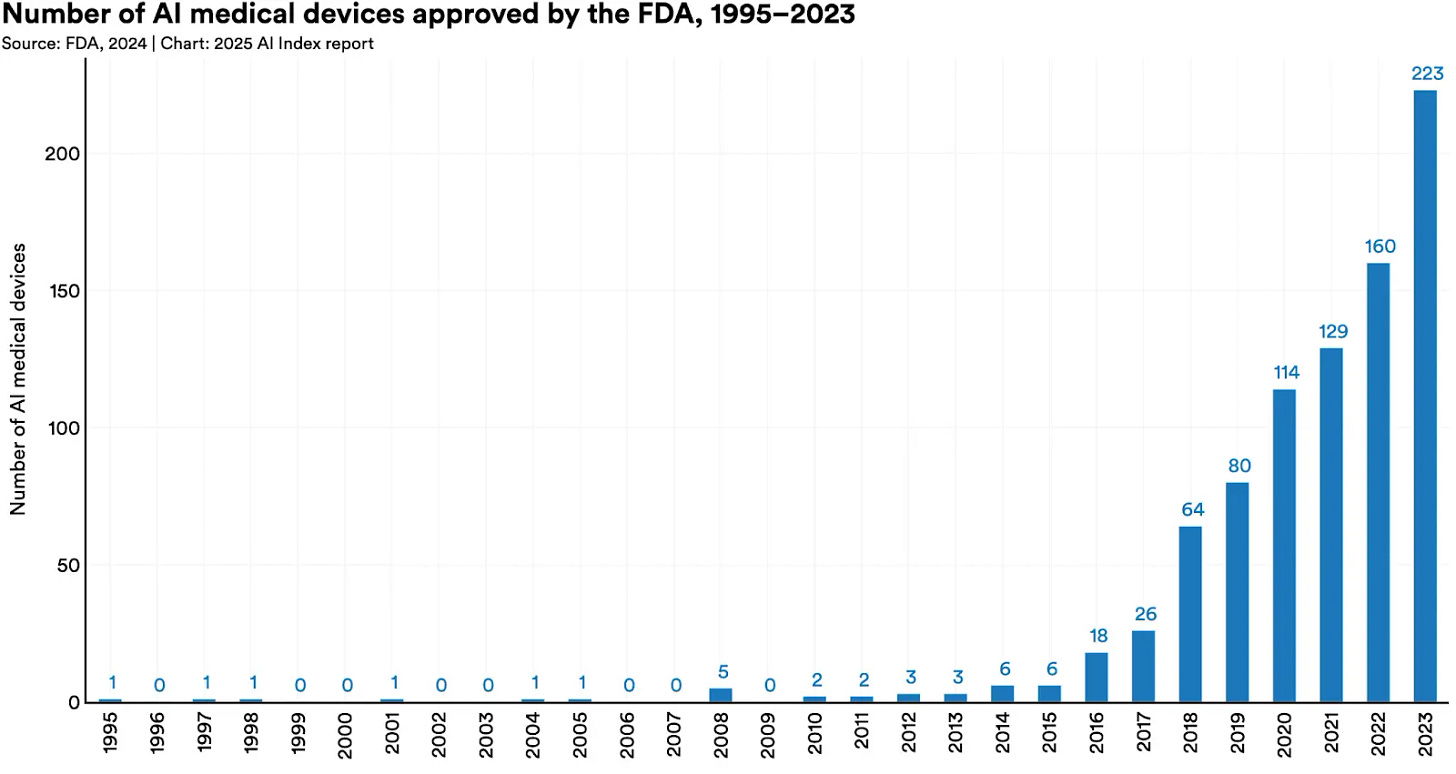

In science and healthcare, AI now outperforms doctors on some diagnostic tasks. OpenAI also launched new models, o1 and o3, designed for step-by-step reasoning. Though slower, o1 scored about 8× higher than GPT-4o on IMO-level math problems.

Why it Matters:

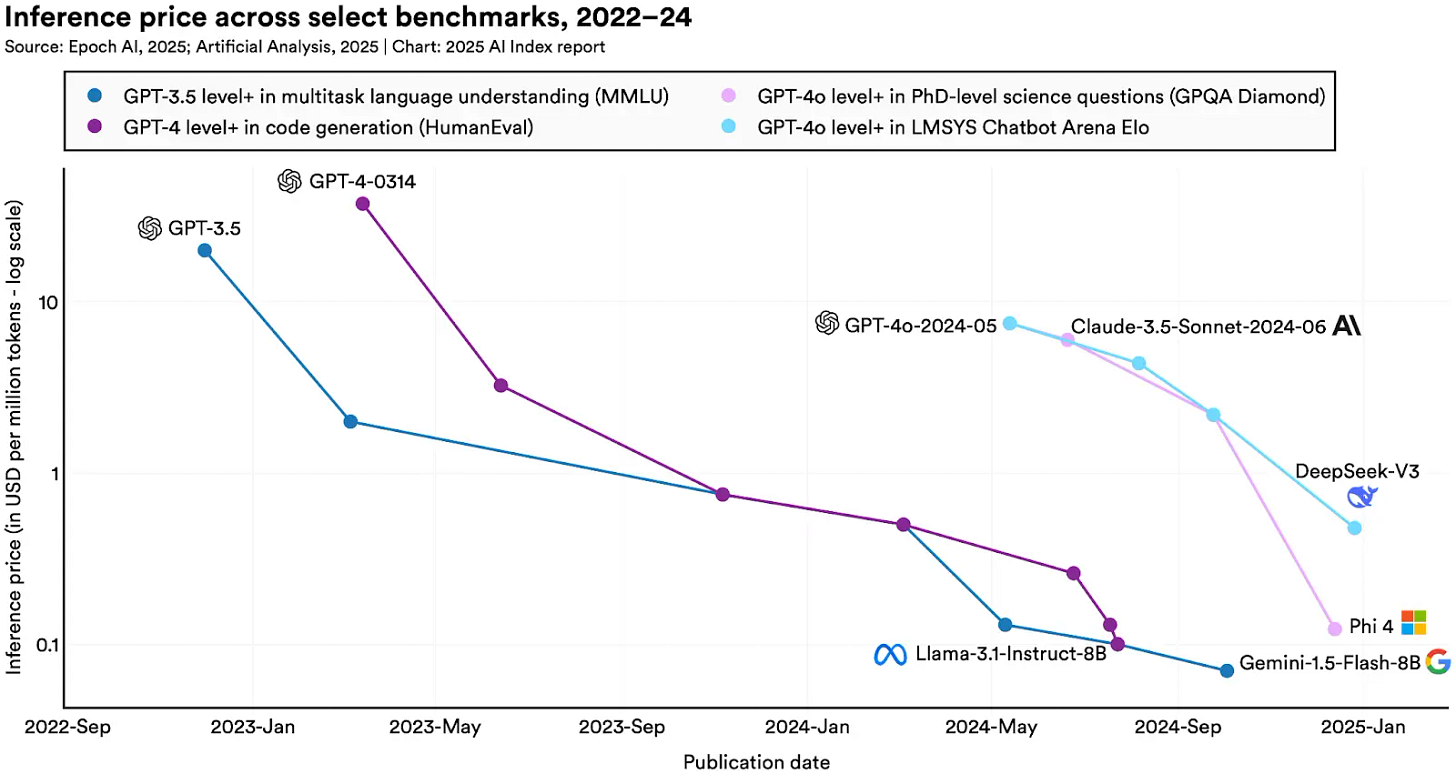

AI has moved from research labs to core infrastructure. From automated science to enterprise productivity and diagnostics, it’s clear that AI is now a multiplier on human capability. The cost of inference has dropped 280× since 2022, opening access while raising stakes.

OpenAI’s roadmap shifted in 2024: it released its o-series models before GPT-5, suggesting a move toward deliberate scaling of reasoning over brute force. Globally, AI optimism is rising fast in Asia and parts of Europe but remains mixed in the U.S. Still, one theme is consistent: model capability, deployment, and economic impact are converging faster than ever.