🧠 Google Threads Gemini Through Everything

From Search to Smart Glasses, AI is Now the Interface.

👋 This week in AI


📰 Latest Google News

All eyes are on Google today. The company is flooding the market with fresh AI features, and the highlights are worth a look: together they hint at an AI layer on everything we touch.

Microsoft’s up next.

Google I/O 2025: Gemini Threads AI Through Search, Code and Glasses

Google placed Gemini at the core of every reveal, focusing on speed, richer media, and agent-like actions that reach from code editors to wearables.

Launch Highlights:

Revamped Project Astra – Adds native voice dialogue, long-term memory, on-device UI control and content retrieval. Features will surface in Gemini Live, Search, a Live API for developers and Android XR glasses.

Agent Mode in Gemini app – An early-preview feature (US, AI Ultra Plan) that plans and executes multistep tasks online. It combines live browsing, Google-app data and the Model Context Protocol; in one example, it finds flats on Zillow and books viewings without manual clicks.

Veo 3 generative video – Text-to-video now adds native soundtracks, keeps character consistency and produces photorealistic eight-second clips ready for editing.

Flow AI filmmaking tool – Combines Veo 3 clips and Imagen stills, lets creators adjust camera moves and stitch multiple scenes into longer videos through simple prompts.

Gemini 2.5 Pro Deep Think mode – Parallel reasoning explores several hypotheses before responding, topping MMMU multimodal, LiveCodeBench coding and 2025 USAMO maths benchmarks.

Stitch prompt-to-UI generator – Converts text prompts into interface mock-ups and exports editable files directly to Figma, accelerating design iterations.

Gemini Diffusion coding model – Generates code at 909 tokens per second, about ten to fifteen times faster than traditional autoregressive models, and solves non-causal maths prompts that defeat GPT-4o.

Virtual Try-On in Shopping – Users upload a full-body photo and see garments realistically draped on their image inside Search, launching in Search Labs in the US.

Jules coding agent – Web app powered by Gemini 2.5 Pro edits GitHub repos from plain-English instructions inside a Google-hosted VM, no local setup required.

Imagen 4 image model – Produces richer colour, sharper detail and precise typography while generating faster than earlier versions.

AI Mode in Google Search – Rolling out to US users, replaces link lists with conversational answers, deep research threads and built-in task execution such as ticket purchases.

Gemini scale milestones – 400 million monthly active users, 480 trillion tokens processed each month, seven million developers on the API.

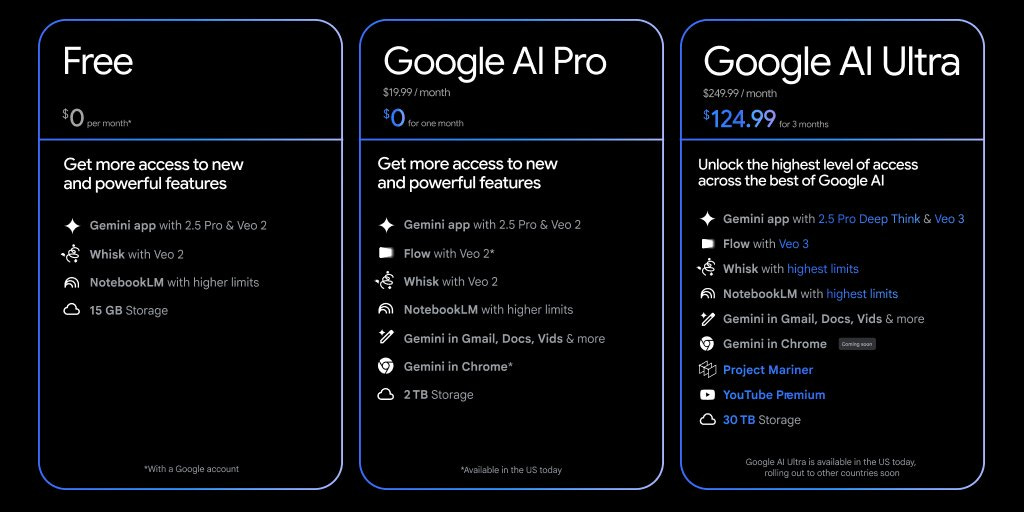

AI Ultra Plan subscription – Costs $249.99 per month in the US and offers higher usage caps, 30 terabytes of cloud storage, ad-free YouTube and early agent tools like Project Mariner.

Android XR smart glasses – In the demo, prototype frames showed translated speech on the lens and answered contextual queries; commercial models are coming via Samsung, Gentle Monster and Warby Parker.

Real-time translation in Google Meet – Gemini translates speech during live calls in near real time while preserving the speaker's voice and tone, removing language barriers for distributed teams.

Why it Matters

Google is turning Search from an index into an assistant. AI Mode lets Gemini interpret intent, gather context and act inside the results page. That keeps users within Google's ecosystem for both answers and transactions, and it preserves advertising relevance by pairing generated responses with hyper-targeted ads.

Across products, Gemini powers code generation, design, meeting translation, shopping, and wearables, creating a seamless layer of intelligence that knows the user’s context and can shift between text, images, video, and tasks.

This integration positions Google to capture usage data continuously, monetise heavy demand through premium tiers, and anchor future interactions in ambient devices where Gemini becomes the default interface to both information and action.

📝 Check out all the announcements on Google's I/O page

AlphaEvolve Proves AI Can Generate Original Ideas: From Extra Compute to Record-Breaking Algorithms

AlphaEvolve shows that AI can create ideas humans have never found. Using Gemini models, the agent discovered a way to multiply two 4×4 complex-valued matrices with 48 scalar multiplications instead of 49, beating a record set by Strassen's 1969 algorithm that had stood for 56 years.
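For context on the record AlphaEvolve broke: its own 48-multiplication scheme is not reproduced here. The sketch below instead shows the 1969 baseline, Strassen's algorithm, which multiplies 2×2 block matrices with seven products instead of eight; applied recursively to 4×4 matrices it needs 7 × 7 = 49 scalar multiplications. The function name `strassen` and the `counter` argument are illustrative choices, not taken from any published code.

```python
import numpy as np

def strassen(A, B, counter):
    """Illustrative Strassen (1969) multiplication, NOT AlphaEvolve's algorithm.
    A and B are n x n arrays with n a power of two; counter[0] tallies
    the scalar multiplications performed at the 1x1 base case."""
    n = A.shape[0]
    if n == 1:
        counter[0] += 1
        return A * B  # a single scalar multiplication
    h = n // 2
    A11, A12, A21, A22 = A[:h, :h], A[:h, h:], A[h:, :h], A[h:, h:]
    B11, B12, B21, B22 = B[:h, :h], B[:h, h:], B[h:, :h], B[h:, h:]
    # Seven recursive products replace the schoolbook method's eight
    M1 = strassen(A11 + A22, B11 + B22, counter)
    M2 = strassen(A21 + A22, B11, counter)
    M3 = strassen(A11, B12 - B22, counter)
    M4 = strassen(A22, B21 - B11, counter)
    M5 = strassen(A11 + A12, B22, counter)
    M6 = strassen(A21 - A11, B11 + B12, counter)
    M7 = strassen(A12 - A22, B21 + B22, counter)
    # Recombine the products using additions and subtractions only
    C11 = M1 + M4 - M5 + M7
    C12 = M3 + M5
    C21 = M2 + M4
    C22 = M1 - M2 + M3 + M6
    return np.block([[C11, C12], [C21, C22]])

# Random complex-valued 4x4 matrices, the case AlphaEvolve improved
rng = np.random.default_rng(0)
A = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
B = rng.standard_normal((4, 4)) + 1j * rng.standard_normal((4, 4))
count = [0]
C = strassen(A, B, count)
print(count[0])               # 49
print(np.allclose(C, A @ B))  # True
```

The schoolbook method would need 4³ = 64 multiplications here. Recursing all the way down to 1×1 blocks is slow in Python; practical implementations switch to ordinary multiplication below a size threshold.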

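The same system re-tuned the scheduling heuristic for Google's datacentres, recovering on average 0.7 percent of the company's worldwide compute, and sped up a key kernel in Gemini's architecture enough to cut overall Gemini training time by 1 percent. It also optimised low-level code for FlashAttention kernels, giving up to a 32.5 percent speedup.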

Across fifty research problems, it matched experts three-quarters of the time and bettered them in one-fifth of cases. Early Access for academics is on the way.

Why it Matters:

Many people assume large language models only rearrange what they have read. AlphaEvolve proves otherwise: it invents answers that can be checked by a computer, already saves energy in live services, and offers fresh approaches to stubborn research puzzles.

Pairing AI creativity with automated verification could shorten development cycles, shrink datacentre footprints and give scientists a tireless partner for idea generation.