Elon sues OpenAI, Google's Gemini "racist" and text-to-video models level up

Weekly recap of what's happened in AI

Welcome to the biggest headlines in AI this week.

I scroll so you don’t have to 👇

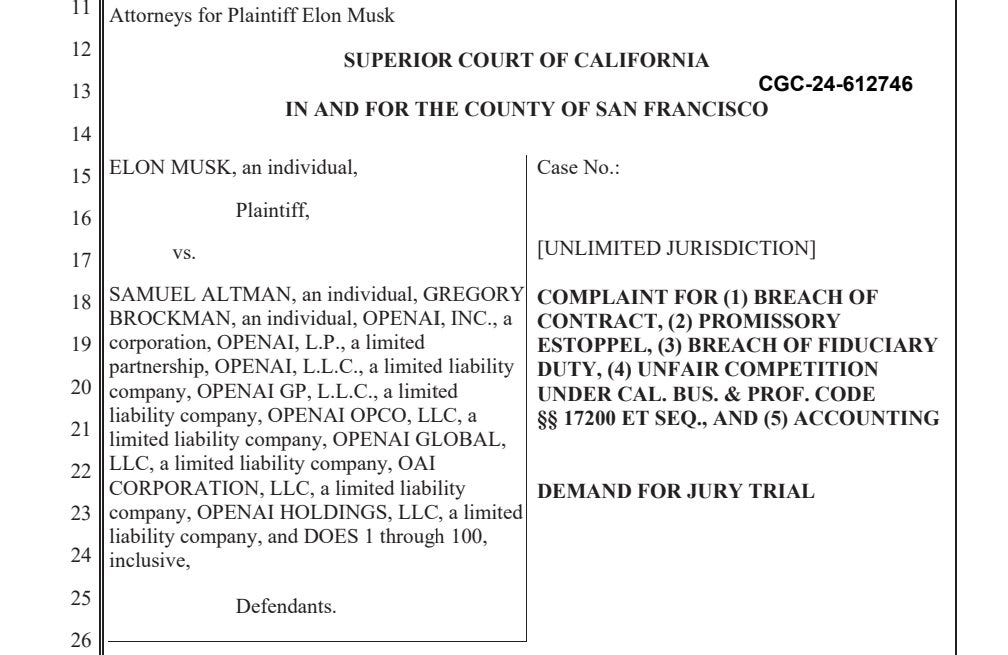

Elon Musk sues OpenAI

Musk has filed a lawsuit against OpenAI for breach of contract, breach of fiduciary duty and unfair business practices, arguing that its close ties to Microsoft abandon its founding non-profit mission, and is asking for OpenAI to return to open source.

His argument is that OpenAI has already achieved artificial general intelligence (AGI). AGI falls outside OpenAI's licensing deal with Microsoft, so in Musk's view the technology should belong to the non-profit rather than be commercialised.

Interestingly, a similar breakthrough is rumoured to be the reason OpenAI's board briefly ousted Sam Altman in November 2023, before the coup failed. Reports of a model called Q*, said to be capable of logic and reasoning, are thought to have caused the board's panic.

LLMs are prediction machines: based on the data they were trained on, they estimate the probability of the next most likely word (token) in a sequence. However, when it comes to mathematics or genuine reasoning, LLMs tend to fail, which is why many speculate that Q* was a step closer to AGI.
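To make the "prediction machine" idea concrete, here is a toy Python sketch. The candidate tokens and their scores (logits) are made up purely for illustration; a real LLM uses a neural network to score tens of thousands of possible tokens. The scores are turned into probabilities with a softmax, and in the simplest (greedy) setup the most probable token is picked. Notice that nothing here does any arithmetic: "4" wins because it is the most probable continuation, not because it was calculated.

```python
import math

def softmax(scores):
    # Convert raw scores (logits) into probabilities that sum to 1.
    # Subtracting the max keeps math.exp from overflowing.
    m = max(scores.values())
    exps = {tok: math.exp(s - m) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}

def predict_next(scores):
    # Greedy decoding: choose the single most probable next token.
    probs = softmax(scores)
    return max(probs, key=probs.get), probs

# Hypothetical scores a model might assign after the prompt "2 + 2 ="
logits = {"4": 3.1, "5": 1.2, "22": 0.4}
token, probs = predict_next(logits)
print(token)
```

Real chatbots usually sample from these probabilities rather than always taking the top token, which is one reason the same prompt can produce different answers.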

The feud between Elon Musk and Sam Altman is intensifying, with Sam replying to a tweet from 2019.

Elon Musk was a co-founder of OpenAI. For more details, see:

A Short History of OpenAI - Chamath Palihapitiya

Sam Altman's first emails to Elon about starting OpenAI

Google’s Gemini model labelled racist and share price slumps

Due to their dominance in search and a desire to avoid regulatory backlash, Google often proceeds cautiously. Despite Google publishing the transformer paper that enabled LLMs in 2017, OpenAI commercialised the technology first. Google's rushed response, Bard, made a factual error in its launch demo that wiped around $100 billion off Alphabet Inc's market value. Since then, Google has been trying to catch up. However, they have struggled to change, leading to excessive guardrails in their AI models.

The key issue they’re facing now is that their Gemini models appear to have been trained from the ground up to ensure extreme diversity and political correctness, leading to outputs that range from historical inaccuracies to statements like “It is not possible to say who definitively impacted society more, Elon tweeting memes or Hitler”.

The issues began when users asked Gemini to generate images of historical figures who were white, like the Founding Fathers of America. The resulting images and text were racially diverse regardless of context or historical accuracy. This seemed particularly true when the subject was white people, leading many to call the model racist.

Google’s left-leaning culture has been blamed for creating this bias in its models, and many believe Google's core mission to “…organize the world's information and make it universally accessible and useful” has been superseded by the agenda of activists within its workforce.

Personally, I believe this is the result of Google being too risk averse and layering in excessive safeguards at the expense of the user experience. This is a huge PR issue for Google, and even though many of their AI products are excellent, they are seen as always being on the back foot.

Google’s CEO Sundar Pichai responded in a leaked internal memo:

“I want to address the recent issues with problematic text and image responses in the Gemini app (formerly Bard). I know that some of its responses have offended our users and shown bias – to be clear, that’s completely unacceptable and we got it wrong.

Our teams have been working around the clock to address these issues. We’re already seeing a substantial improvement on a wide range of prompts. No AI is perfect, especially at this emerging stage of the industry’s development, but we know the bar is high for us and we will keep at it for however long it takes. And we’ll review what happened and make sure we fix it at scale.

Our mission to organize the world’s information and make it universally accessible and useful is sacrosanct. We’ve always sought to give users helpful, accurate, and unbiased information in our products. That’s why people trust them. This has to be our approach for all our products, including our emerging AI products.

We’ll be driving a clear set of actions, including structural changes, updated product guidelines, improved launch processes, robust evals and red-teaming, and technical recommendations. We are looking across all of this and will make the necessary changes.

Even as we learn from what went wrong here, we should also build on the product and technical announcements we’ve made in AI over the last several weeks. That includes some foundational advances in our underlying models e.g. our 1 million long-context window breakthrough and our open models, both of which have been well received.

We know what it takes to create great products that are used and beloved by billions of people and businesses, and with our infrastructure and research expertise we have an incredible springboard for the AI wave. Let’s focus on what matters most: building helpful products that are deserving of our users’ trust.”

Mistral AI partners with Microsoft

In an effort to diversify away from OpenAI, Microsoft has partnered with Mistral AI, a French startup that has released a model akin to GPT-4. Microsoft lets customers use Mistral through Azure and has given the company $16 million in funding. Interestingly, the EU's competition watchdog is examining the deal; big tech mergers and investments have come under increasing scrutiny recently (Adobe/Figma and Microsoft/Activision, to name a few). Mistral also just released a ChatGPT competitor, Le Chat.

Pika Labs introduces their lip sync feature

One of the first movers in text-to-video generation, Pika Labs has released a lip sync feature. Lip syncing appears to be the new frontier in text-to-video.

With the release of OpenAI's Sora and open-source models from ByteDance (TikTok's parent company), I wonder how Pika will compete.

Alibaba releases model which uses an image and soundtrack to generate lip synced videos

Chinese companies are ramping up their activity in the GenAI space, as shown by Alibaba’s new EMO (Emote Portrait Alive) model. From a single image and an audio track, the model creates a realistic lip-synced video. Very impressive.

Consistency across characters and scenes is a step forward for text-to-video GenAI

Lightricks introduced LTX Studio, an AI-powered filmmaking tool that assists creators in visualizing stories. The web-based tool generates scripts, storyboards, and customizable short clips, addressing challenges like continuity across characters and scenes.

Elon hints at using Midjourney for X

Musk's xAI has released Grok, a generative AI chatbot available on X. Grok has access to live data from X, which gives it an edge over other models. It appears they are looking to roll out image generation features in collaboration with Midjourney. In my opinion, Midjourney is still the leader in image generation.

"We are in some interesting discussions with Midjourney and something may come of that, but either way, one way or another, we will enable AI art generation on the X platform.” - Elon Musk

That’s a wrap!

Reply to this email to tell me what you think and feel free to share it.
