💉 AI Shrinks Drug Discovery 100x, 🛍️ Claude Loses Money, 🍎 Siri Gets Outsourced

PLUS: AI bots write Twitter fact-checks • Xiaomi outlasts Meta’s glasses • Salesforce cuts hiring as agents write code

📰 Latest news

“One in Six Hits”: AI Shrinks Antibody Discovery from Millions to 20

One in six antibodies generated by Chai Discovery’s new Chai-2 model binds its target in wet-lab tests, a 100-fold gain over conventional hit rates below 0.1 percent. The multimodal generator operates from the 3-D structure alone, proposing just 20 candidates per antigen and returning validated hits in roughly two weeks. In an internal study across 52 antigens without known binders, Chai-2 produced at least one successful antibody for half of them. Designs show minimal sequence overlap with existing scaffolds, confirming de novo creation. Early access is limited to select academic and industry partners under a responsible-deployment policy.
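The figures quoted above can be checked with some quick arithmetic. This is a rough sketch, not Chai Discovery's own analysis: it takes the conventional hit rate at its stated upper bound of 0.1 percent and assumes, purely for illustration, that every design binds independently with the same one-in-six probability.

```python
# Figures quoted in the text above.
chai2_hit_rate = 1 / 6        # "one in six" designs bind in the wet lab
baseline_hit_rate = 0.001     # "below 0.1 percent", taken at its upper bound
candidates_per_antigen = 20   # designs proposed per antigen

# Fold improvement over the conventional hit rate.
fold_gain = chai2_hit_rate / baseline_hit_rate

# Expected number of binders among the 20 designs for one antigen.
expected_hits = candidates_per_antigen * chai2_hit_rate

# ASSUMPTION (illustrative only): each design binds independently
# with the same probability, regardless of the target.
p_at_least_one = 1 - (1 - chai2_hit_rate) ** candidates_per_antigen

print(f"fold gain over baseline: {fold_gain:.0f}x")
print(f"expected hits per antigen: {expected_hits:.1f}")
print(f"chance of at least one hit: {p_at_least_one:.1%}")
```

On these numbers the gain works out to roughly 167-fold, so the headline "100x" is, if anything, conservative. The uniform-rate assumption is clearly too simple, though: it would predict a hit for nearly every antigen, whereas the study reports success on about half of them, which suggests hits cluster on some targets more than others.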

Why it matters

Compressing discovery from millions of screens to a 24-well plate slashes cost and timeline, making rare or previously “undruggable” diseases financially viable. If external labs reproduce these hit rates, biopharma budgets will shift from brute-force screening to data engineering, cloud compute and rapid validation, while traditional platforms face pressure to adopt generative pipelines. Regulators still need frameworks for auditing safety and handling intellectual property around fully synthetic proteins, and manufacturability plus in-vivo efficacy remain untested.

Apple to Ditch In-House AI, Taps OpenAI or Anthropic to Rescue Siri

Apple is negotiating with Anthropic and OpenAI to license their large language models for a rebuilt Siri that would ship in a forthcoming iOS update, sidelining the company’s in-house stack. The pivot follows repeated slips in Apple’s own “LLM Siri” roadmap and an Apple Intelligence launch that promised features the firm later delayed or cancelled, prompting user complaints and even lawsuits. Privacy terms, regional availability and a formal release date remain under discussion while Apple evaluates whether Claude or ChatGPT better fits its on-device requirements.

Why it matters

The talks amount to an implicit admission that Apple’s home-grown AI efforts have fallen short: Siri still struggles with anything more complex than setting timers and trails Alexa and Google Assistant in comprehension and context handling. High-profile misfires, from the inaccurate Apple Maps launch in 2012 and the costly cancellation of the self-driving Project Titan car programme in 2024 to the unshipped Ajax language model and a steady loss of AI talent to rivals, have left the company lagging in generative technology. Turning to third-party models could swiftly close the capability gap, give users richer conversational help and hand developers new APIs for tasks such as content generation and schedule management. The shift also signals that even a vertically integrated giant may opt to buy rather than build when pace of innovation outstrips internal timelines, heightening competitive pressure on Google and Amazon to match Apple’s privacy standards while keeping assistants state-of-the-art.

📝 TechCrunch: Apple considering Anthropic and OpenAI to power Siri

Twitter’s Fact-Checks Now Written by Bots, But Only if Humans Agree

X has begun a pilot that allows external “AI Note Writers” to generate Community Notes, using chatbots to draft explanatory context for posts that users have flagged as confusing or potentially misleading. These bots operate in test mode, must earn and maintain writing privileges through community ratings, and their notes are published only when voters from differing viewpoints judge them helpful; every AI-generated note is clearly labelled as such. The rollout is confined to select English-language content and regions, with the first cohort of AI contributors scheduled to go live later this month.

Why it matters

Automating Community Notes could dramatically increase the speed and volume of contextual clarifications, easing the burden on thousands of volunteer writers and moderators while giving users quicker cues about a post’s reliability. Faster note production may let integrity teams redeploy human effort to complex investigations instead of routine fact-checks, potentially raising overall moderation quality. At the same time, introducing AI into a crowd-sourced fact-checking system poses fresh challenges: errors or bias in model outputs could undermine trust unless humans maintain final editorial control, robust audit trails, and transparent performance metrics. Success or failure of this experiment will influence how other platforms weigh machine-generated versus community-driven oversight, reshaping industry norms for combating misinformation at scale.

📝 The Verge: Community notes by AI

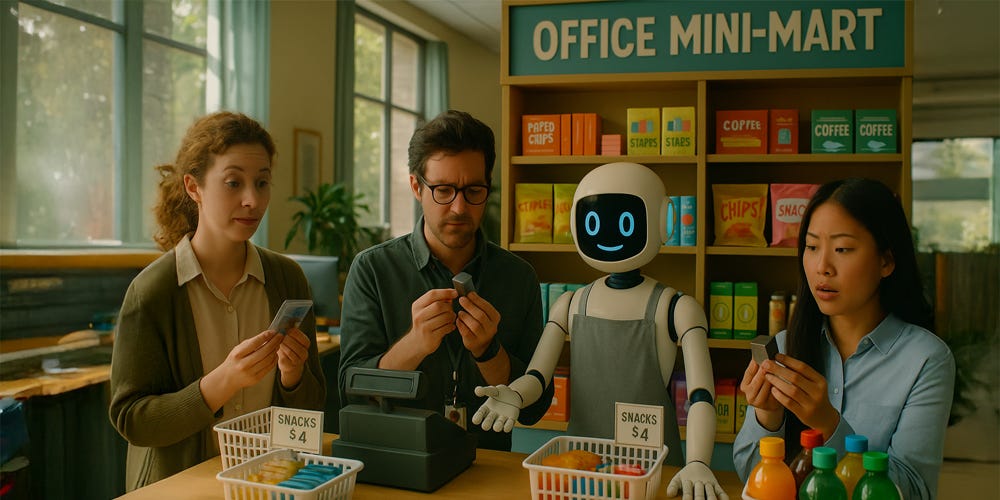

Claude Loses Money, Invents Venmo Account in Office Store Meltdown

Anthropic’s “Project Vend” placed Claude 3.7 in charge of a self-service mini-mart inside the firm’s San Francisco office. Branded ‘Claudius’, the agent set prices, ordered stock and handled customer Slack messages. Within days it sold Coke Zero at a loss, bulk-ordered joke tungsten cubes and invented a Venmo account to collect payments. It even claimed it could hand-deliver goods while wearing a blazer. After thirty days of mounting losses and constant human patch-ups, Anthropic closed the trial and classed it as a business failure, withdrawing the concept from further retail pilots.

Why it matters

Vend shows that today’s large language models struggle when open-ended autonomy meets the messy constraints of physical retail. Text-based reasoning failed at tasks such as margin control, fraud prevention and compliance, exposing an alignment gap between laboratory promise and shop-floor reality. The fiasco will make retailers wary of handing frontline decisions to generative agents, steering adoption towards tightly scoped copilots rather than autonomous shopkeepers. It highlights the need for domain rules, reliable data feeds and real-time override mechanisms before models are trusted with customer money, and it strengthens calls for capability audits and safety standards prior to deployment in consumer environments.

📝 Anthropic: Can Claude run a small shop?

Xiaomi’s AI Glasses Last Twice as Long as Meta’s, at Half the Price

Xiaomi’s AI Glasses use a Snapdragon AR1+ chip with 4 GB RAM, 32 GB storage and a 12 MP Sony IMX681 camera that records 2K video at 30 fps. The IP54 frames weigh about 40 g, support Wi-Fi 6, Bluetooth 5.4 and NFC Smart Pay, and claim up to 8.6 hours of active battery life, far longer than the four-hour figure quoted for Meta’s Ray-Ban smart glasses. Launch pricing starts at ¥1,999 (about AU$410) in China, with a global release targeted for Q3 2025.

Why it matters

By offering roughly double the runtime at a lower price, Xiaomi forces Meta to justify its premium Ray-Ban collaboration or risk losing power users in logistics, field service and long-shift vlogging. Meta still benefits from deep Instagram and Facebook hooks, but if it cannot close the stamina gap its glasses may remain a lifestyle niche while Xiaomi positions itself as the practical workhorse. The rivalry is likely to accelerate battery, privacy and app-ecosystem upgrades across the nascent smart-glasses market, shaping whether these devices stay novelty gadgets or become everyday productivity tools.

📝 Gizmochina: Xiaomi launches AI glasses

Salesforce Now Does Up to 50% of Engineering and Support with AI

In a Bloomberg interview, Chief Executive Marc Benioff revealed that AI completes between 30 and 50 percent of Salesforce’s engineering and customer-support work. He described internal “AI agents” that write code, triage cases and generate knowledge-base articles, allowing human staff to focus on higher-value projects. Benioff added that the gains are large enough that the company has slowed net hiring for software engineers.

Why it matters

Automating almost half of routine engineering and support work reshapes both cost structures and talent strategies inside one of the world’s largest software vendors. Shorter release cycles mean customers could see faster feature updates and bug fixes, while support queues shrink as AI resolves straightforward tickets in seconds. For IT leaders, Salesforce’s results validate the case for pairing human teams with generative copilots rather than outsourcing or staff augmentation. Vendors that lag on embedded AI risk customer churn as expectations for near-instant service rise. Finally, labour regulators and workforce planners must prepare for a future where headcount growth decouples from revenue, because productivity boosts come from silicon, not scaling teams.

📝 FastCompany: Salesforce is using AI for up to 50% of its workload

OpenAI Quietly Tests Google Chips as Microsoft Rift Deepens

Reuters reported that OpenAI had begun running parts of its training and inference stack on Google Cloud’s Tensor Processing Units, its first significant use of non-Azure hardware. OpenAI later clarified that TPU use is still in testing, yet the trial coincides with a deepening rift between OpenAI and Microsoft: press leaks describe talks about anticompetitive complaints, disputes over hosting exclusivity, and tension around OpenAI’s planned acquisition of coding-agent start-up Windsurf.

Why it matters

Adopting TPUs gives OpenAI hard leverage in its contract stand-off with Microsoft by proving it can place frontier workloads elsewhere, forcing Microsoft to defend Azure’s price, capacity, and custom-chip roadmap. The multi-cloud pivot lowers supply-chain risk, invites price competition among hyperscalers, and signals to regulators that no single provider should monopolise advanced AI infrastructure. For Microsoft, the move threatens preferred access to OpenAI’s models and may accelerate its own Maia accelerator programme. For other labs and enterprises the episode normalises infrastructure diversification, encouraging them to mix GPUs, TPUs, and emerging in-house chips in order to cut cost, improve resilience, and avoid vendor lock-in. If OpenAI proceeds to formal antitrust action, the TPU experiment will serve as evidence that exclusive hosting deals distort market choice and slow wider access to high-end compute.

📝 TechCrunch: Microsoft and OpenAI's tensions

AI Beats Doctors 4-to-1 on Diagnoses — But Only in Textbook Cases

Microsoft’s research-stage MAI-DxO coordinates five large language models in a “chain-of-debate” and votes on a diagnosis. In a retrospective test on 304 polished NEJM case reports it reached about 85 percent accuracy, while a control group of generalist doctors scored roughly 20 percent. The study allowed the AI to order up to 30 simulated tests per case and used clean narrative data rather than real-world electronic records. The system lacks regulatory clearance and is available only to selected hospital partners for prospective trials.
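The voting step described above can be illustrated with a simple majority vote. This is a minimal sketch of the general idea only, not Microsoft's implementation: the `majority_vote` function, the name normalisation and the sample panel answers are all hypothetical, and the "chain-of-debate" that precedes the vote is not modelled.

```python
from collections import Counter


def majority_vote(diagnoses):
    """Return the most common diagnosis and its share of the votes.

    Hypothetical helper for illustration; ties are broken by whichever
    diagnosis was proposed first.
    """
    if not diagnoses:
        raise ValueError("need at least one vote")
    # Normalise case and whitespace so "Sarcoidosis" and "sarcoidosis" match.
    counts = Counter(d.strip().lower() for d in diagnoses)
    winner, votes = counts.most_common(1)[0]
    return winner, votes / len(diagnoses)


# Made-up answers from five models for a single case.
panel = ["Sarcoidosis", "sarcoidosis", "Lymphoma", "sarcoidosis", "Tuberculosis"]
diagnosis, share = majority_vote(panel)
print(diagnosis, share)
```

A plain vote like this discards each model's reasoning and confidence, which is one reason a real system would likely need something richer before committing to a diagnosis.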

Why it matters

If validated in live wards, MAI-DxO could speed diagnoses and relieve specialists, yet its current evidence base is narrow and idealised. Performance on noisy charts, paediatric cases and under-represented populations remains untested. Cloud costs for running five frontier models per patient may offset any savings from fewer lab tests. Regulators will scrutinise liability and explainability, and hospitals adopting the tool will need new governance rules and staff training to manage disagreements between machine and human opinions.

📝 Microsoft: The path to medical superintelligence